Snowflake Arctic, an open-source, efficient, and cost-effective LLM, is set to take on Llama and Mixtral

It's delivering better performance than most available LLMs



When it comes to open-source LLMs (Large Language Models), the options are rather limited. But with Snowflake unveiling Arctic, things are about to get exciting!

Branded as the Best LLM for Enterprise AI, Arctic hits the bull’s eye in two aspects: low training cost and high training efficiency.

Arctic’s announcement blog reads,

Arctic offers top-tier enterprise intelligence among open source LLMs, and it does so using a training compute budget of roughly under $2 million (less than 3K GPU weeks). This means Arctic is more capable than other open source models trained with a similar compute budget. The high training efficiency of Arctic also means that Snowflake customers and the AI community at large can train custom models in a much more affordable way.

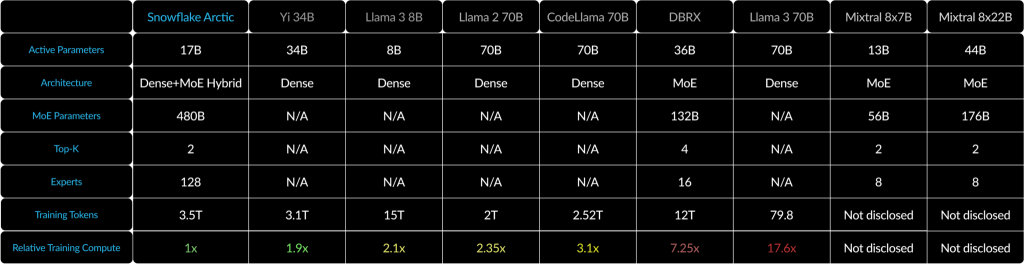

According to data shared by Snowflake, Arctic delivers better performance with less training compute and at a lower cost than Llama 3 and Mixtral 8x7B.

To achieve this training efficiency, Arctic uses a Dense-MoE Hybrid transformer architecture with 480B total parameters, of which only 17B are active for any given token.
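To see how the two parameter counts relate, here is a minimal back-of-the-envelope sketch. The breakdown it uses (a 10B dense transformer, 128 experts of 3.66B parameters each, and top-2 routing) comes from Snowflake's announcement rather than from this article, so treat those numbers as assumptions; the function names are purely illustrative.

```python
# Rough parameter arithmetic for a dense + mixture-of-experts (MoE) hybrid.
# Assumed figures (from Snowflake's announcement, not from this article):
DENSE_PARAMS_B = 10.0    # dense transformer, in billions of parameters
NUM_EXPERTS = 128        # number of MoE experts
EXPERT_PARAMS_B = 3.66   # parameters per expert, in billions
TOP_K = 2                # experts selected per token


def total_params_b(dense: float, num_experts: int, expert: float) -> float:
    """All parameters that must be stored: dense part plus every expert."""
    return dense + num_experts * expert


def active_params_b(dense: float, top_k: int, expert: float) -> float:
    """Parameters used for one token: dense part plus only the routed experts."""
    return dense + top_k * expert


total = total_params_b(DENSE_PARAMS_B, NUM_EXPERTS, EXPERT_PARAMS_B)
active = active_params_b(DENSE_PARAMS_B, TOP_K, EXPERT_PARAMS_B)
print(f"total: about {total:.0f}B, active per token: about {active:.0f}B")
```

Under these assumptions the totals land close to the rounded 480B and 17B figures, which is why the model can be large on disk yet comparatively cheap to run per token.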

Inference efficiency is equally critical. Snowflake partnered with NVIDIA and reports a throughput of 70+ tokens per second at a batch size of 1. The announcement blog notes:

We have collaborated with NVIDIA and worked with NVIDIA TensorRT-LLM and the vLLM teams to provide a preliminary implementation of Arctic for interactive inference.
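For a sense of what 70 tokens per second at a batch size of 1 means in practice, the short sketch below converts throughput into per-token latency and total generation time. It is simple arithmetic, not a benchmark; the function name and the 700-token response length are hypothetical, and 70 is used as a lower bound for the reported "70+".

```python
def seconds_to_generate(num_tokens: int, tokens_per_second: float = 70.0) -> float:
    """Estimate wall-clock time to generate num_tokens at a steady throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Per-token latency implied by the throughput, in milliseconds.
per_token_ms = 1000.0 / 70.0

# Hypothetical 700-token response at the reported lower-bound throughput.
response_seconds = seconds_to_generate(700)
print(f"{per_token_ms:.1f} ms per token, {response_seconds:.0f} s for 700 tokens")
```

The estimate ignores prompt processing and network overhead, so real response times would be somewhat longer.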

You can currently access Snowflake’s Arctic LLM on Hugging Face or in the GitHub repository. In the coming days, it will be available on Amazon Web Services (AWS), Lamini, Microsoft Azure, NVIDIA API Catalog, Perplexity, Replicate, and Together AI.

The LLM landscape has improved significantly over the last few years, and Arctic is a prime example of how far it has come. And with corporations investing heavily in AI, the future looks promising!

What’s your first thought about Snowflake’s Arctic LLM? Share with our readers in the comments section.
