Microsoft announces that Phi-3 models can now be fine-tuned

They can be used to create in-house AI models.

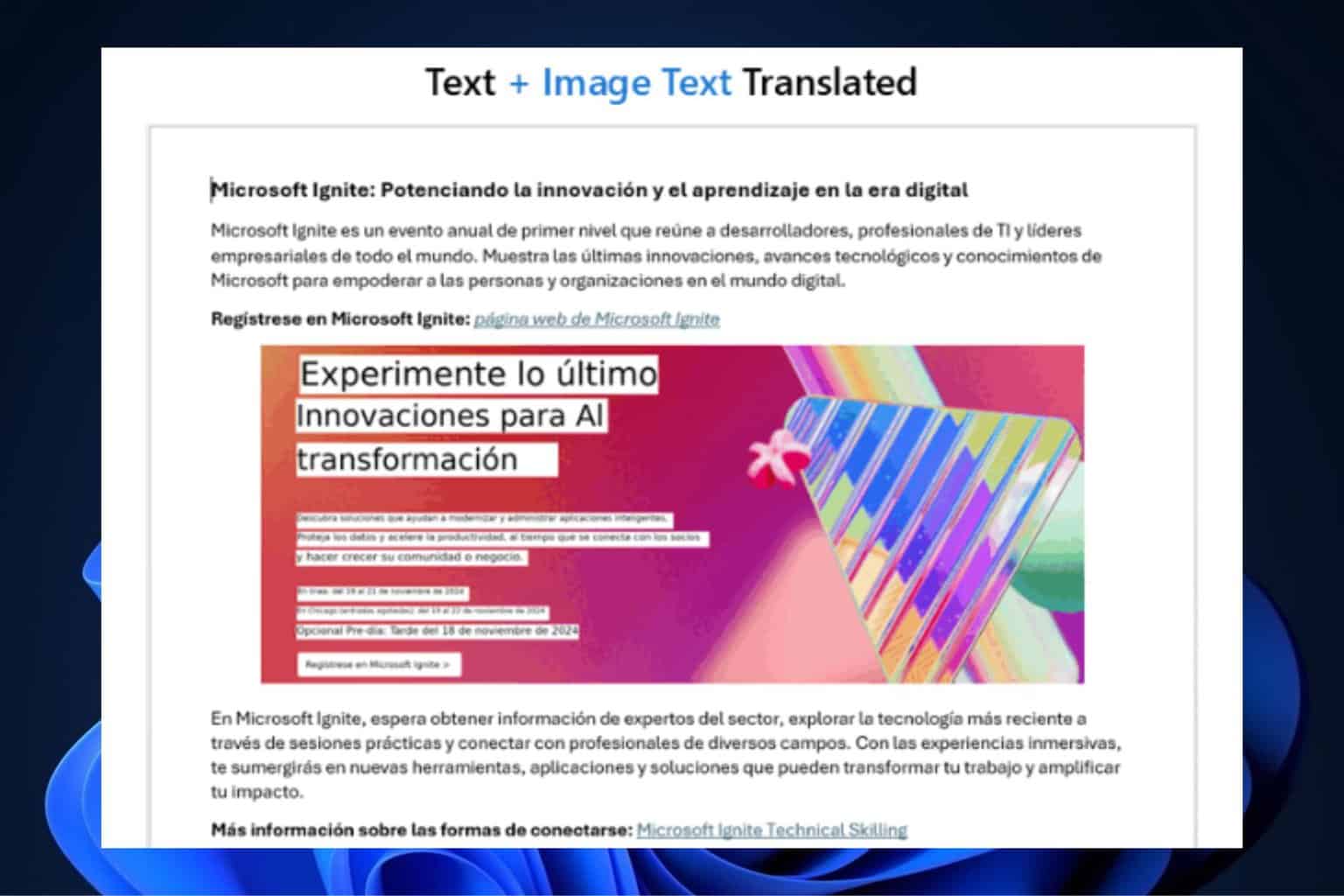

Microsoft now lets developers fine-tune the Phi-3-mini and Phi-3-medium models on Azure. This is a considerable step forward in personalizing and applying AI technologies.

Phi-3 family: Microsoft introduced the Phi-3 family of small language models (SLMs) in April. By combining strong performance with low cost and latency, these models set a new quality bar for SLMs. Phi-3-mini, a 3.8B-parameter language model, and Phi-3-medium, a 14B-parameter language model, each come in two context-length variants (4K and 128K tokens), so they can be matched to different uses.

According to the latest announcement, developers can now fine-tune these models on Azure, adapting them to perform better in specific scenarios. For example, a developer could fine-tune Phi-3-medium for student tutoring, or build a chat app that responds in a particular tone or style.

According to Microsoft's official blog, the possibilities are extensive, and major organizations such as Khan Academy already use Phi-3 in publicly available AI applications. Microsoft has also announced the general availability of its Models-as-a-Service (serverless endpoint) capability, which makes the Phi-3-small model accessible through a serverless endpoint.

This matters because it simplifies building AI applications: developers no longer have to worry about provisioning and managing the underlying infrastructure. Microsoft also says that Phi-3-vision, a multimodal model, will soon be available through a serverless endpoint as well.

Just one month ago, Microsoft updated its Phi-3-mini model, and the update brought a significant jump in performance. According to industry benchmarks, Phi-3-mini-4k now scores 35.8 (up from 21.7), while Phi-3-mini-128k scores 37.6 (up from 25.7).

You can find out more here.