Microsoft researchers discovered Generative Kaleidoscopic Networks, and they could drastically enhance AI's creativity

The effect behind Generative Kaleidoscopic Networks can also be observed in other deep-learning architectures.


In a recent research paper published by Microsoft, AI researchers funded by the Redmond-based tech giant describe Generative Kaleidoscopic Networks, a new concept that could drastically enhance AI’s creativity and might bring the technology a step closer to AGI.

Harsh Shrivastava, the researcher behind the work, found that deep ReLU networks (Multilayer Perceptron architectures that use ReLU activations) exhibit an interesting behavior called over-generalization.

> We discovered that the Deep ReLU networks demonstrate an ‘over-generalization’ phenomenon. In other words, the neural networks learn a many-to-one mapping and this effect is more prominent as we increase the number of layers or depth of the Multilayer Perceptron architecture. We utilize this property of neural networks to design a dataset kaleidoscope, termed as ‘Generative Kaleidoscopic Networks’.

In simpler terms, these networks tend to learn a many-to-one mapping: a function in which many different inputs are sent to the same output.
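To make the idea of a many-to-one mapping concrete, here is a minimal Python sketch using the ReLU function on its own. It is only an illustration of the term, not the researcher's code; the input values are arbitrary choices.

```python
def relu(x: float) -> float:
    """Rectified linear unit: negative inputs are clipped to zero."""
    return max(0.0, x)

# Three distinct inputs (arbitrary values chosen for illustration)...
inputs = [-3.0, -1.5, -0.2]

# ...all land on the same output, so the mapping is many-to-one.
outputs = [relu(x) for x in inputs]
print(outputs)  # [0.0, 0.0, 0.0]
```

A single ReLU already collapses every negative input to zero. Stacking many layers built from such units gives a network more opportunities to send different inputs to the same output.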

This effect became more pronounced as the researcher added more layers or depth to the network. Ultimately, by using this property, the researcher was able to create a new concept called Generative Kaleidoscopic Networks.

These networks act like a dataset kaleidoscope. A model is first trained on a dataset, and its learned mapping function is then applied repeatedly, with each output fed back in as the next input, until samples resembling the training data can be observed.
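As a rough illustration of what applying a mapping function repeatedly looks like, the sketch below uses a hand-built toy function instead of a trained network. The function `f`, the helper `kaleidoscopic_sample`, the step count, the noise range, and the two toy "training samples" (-1.0 and 1.0) are all assumptions made for this example; none of them are taken from the paper or its GitHub code.

```python
import random

def relu(x: float) -> float:
    return max(0.0, x)

def f(x: float) -> float:
    """Hand-built stand-in for a trained network (hypothetical).

    Equals clip(2x, -1, 1), written with two ReLU units. It maps the toy
    "training samples" -1.0 and 1.0 to themselves.
    """
    return relu(2.0 * x + 1.0) - relu(2.0 * x - 1.0) - 1.0

def kaleidoscopic_sample(z: float, steps: int = 60) -> float:
    """Apply f repeatedly, feeding each output back in as the next input."""
    x = z
    for _ in range(steps):
        x = f(x)
    return x

# Start from random noise values (seeded so the run is repeatable).
rng = random.Random(0)
noise = [rng.uniform(-5.0, 5.0) for _ in range(5)]

samples = [kaleidoscopic_sample(z) for z in noise]
print(samples)
```

In this toy setup, the repeated application pulls each noise value toward one of the two points that `f` leaves unchanged. A real network trained on images would be far larger, and its behavior is what the research investigates; this sketch only shows the shape of the loop.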

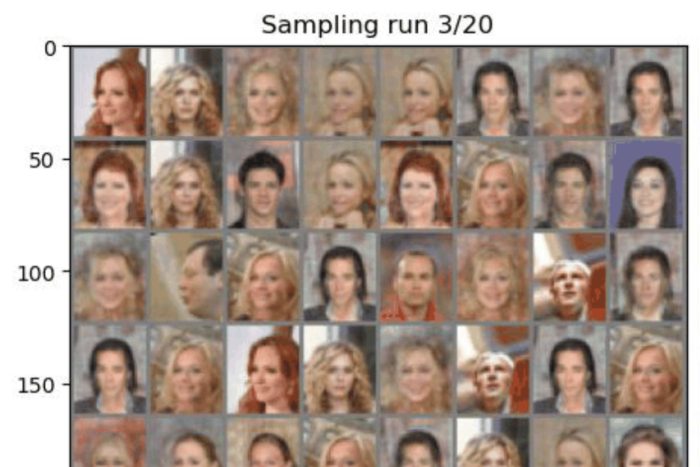

Shrivastava found that the deeper the model, the higher the quality of the recovered samples. The researcher tried the approach on several examples, which you can see on GitHub: in one instance, the model was given 1000 images of dogs, and in another, 1000 images of celebrities. In both cases, the model was able to generate its own images, although some of the results had issues.

The same phenomenon has also been observed in other deep-learning architectures like CNNs, Transformers, and U-Nets, and researchers are investigating these as well.

With enough research, Generative Kaleidoscopic Networks could open up new ways to explore neural network behavior, and they could eventually give AI greater creative capabilities.

A brief discussion about the research can be found here, and the full paper can be read here. You can also check out GitHub if you want to find out more about how these networks function.