OpenAI's new GPT-4o can have natural voice conversations and talk to another AI

GPT-4o sounds a lot more human than ever before


Yeah, I know! We were all expecting GPT-5, but OpenAI has launched GPT-4o instead. Actually, we’re not bothered at all because the new addition is extremely exciting to say the least. It is a model that promises to make our interactions with AI more conversational and intuitive than ever before.

Imagine having a chat with an AI that not only understands the nuances of human language but can also interpret visual cues and respond with a level of empathy and intelligence that feels almost human. This is what GPT-4o brings to the table, and it’s not just for the tech-savvy. Whether you’re using the free version of ChatGPT or the premium one, there’s something in store for everyone.

What is new about GPT-4o?

First, the o (it’s not a zero, it’s the letter O) suffix stands for omni, from the Latin for “all”, a nod to the model handling text, audio, and images together.

If you’re using the free version of ChatGPT, you’re in for a treat. The new GPT-4o has an enhanced user interface, making conversations flow more naturally. No more feeling like you’re talking to a robot. Instead, you’ll find the chatbot more engaging, capable of handling a broader range of data, and providing responses that feel tailored to you.

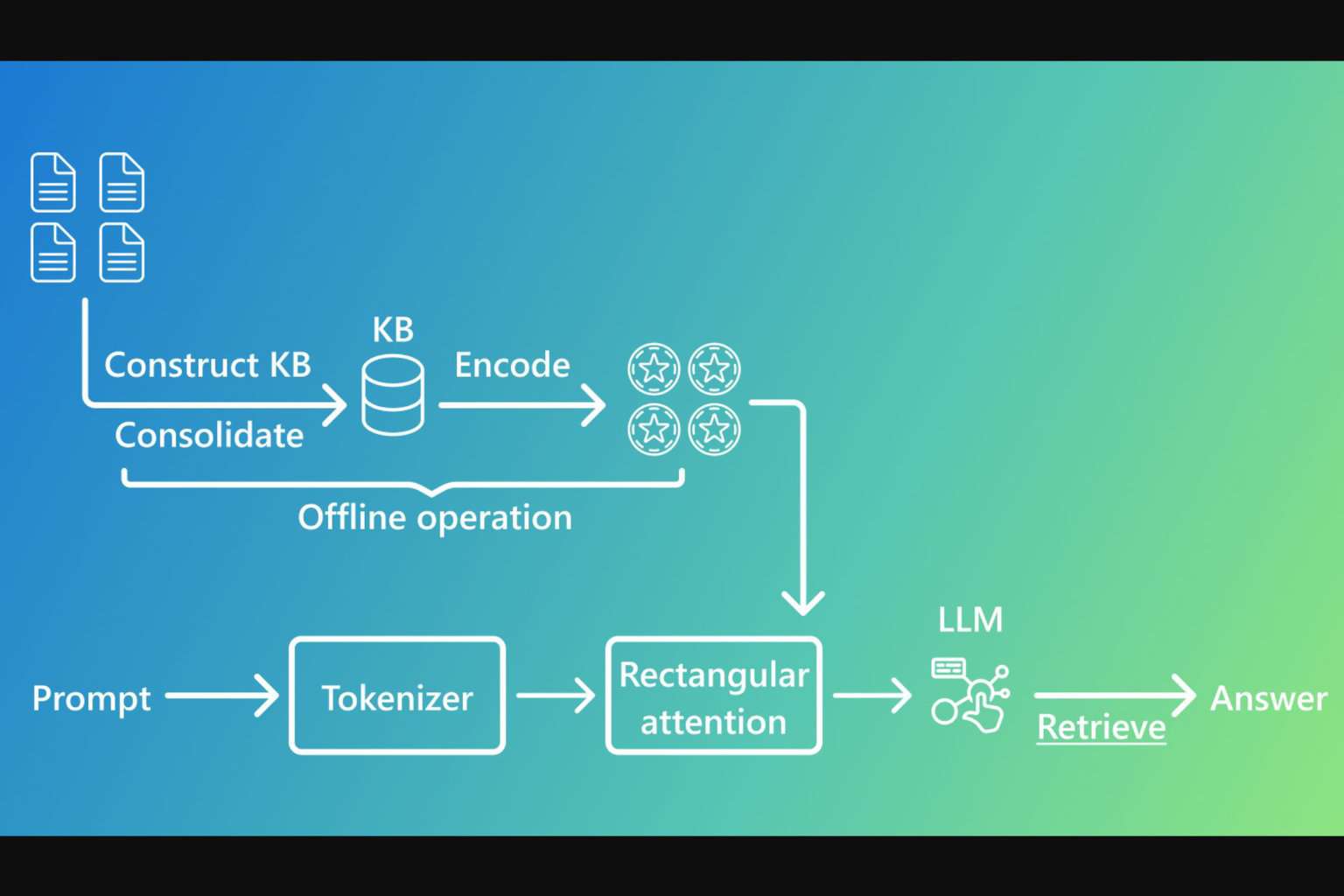

Basically, you can now interact with ChatGPT through voice commands, and it’s smart enough to understand and respond to a range of emotions and speeds. But that’s not all. With its vision capabilities, GPT-4o can see through your phone’s camera, helping you solve visual problems in real-time. Think of it as Google Lens, but supercharged.

However, the most intriguing application of GPT-4o is that it can talk to another AI. In OpenAI’s demonstration video, two models are installed on two different phones, gossiping about the real world. So, prepare for a slew of YouTube and TikTok clips with random chats about anything and everything.

The cherry on top? GPT-4o can sing. We’re not in for real music albums here, but the option sounds fun at least.

OpenAI made GPT-4o available in 50 languages and introduced a desktop app, making it easier for more people to use AI tools without hassle. Whether you’re at home or on the go, AI is now at your fingertips.

For the developers among us, the integration of GPT-4o into the API opens up a world of possibilities. Imagine building applications that can understand and generate large chunks of text, analyze data, or even interact with users in a conversational manner. The future of app development just got a lot more exciting.

And let’s not forget the impact on education and problem-solving. With the ability to share videos, screenshots, and documents, students and professionals alike can get help with complex problems, whether it’s a tricky math equation or a coding challenge. GPT-4o is not just a chatbot; it’s a learning and problem-solving companion, the assistant we were really looking for, if you ask me.

On the OpenAI GPT-4o announcement page, you will also see some clips of the new model in action, which will give you a more detailed picture of what the new version can really do.

How do I get GPT-4o?

OpenAI says that GPT-4o will be available for free, and we have been able to select it from the models in the desktop browser version. For text prompts, OpenAI says the new model matches GPT-4 Turbo on English text and code and improves on it for non-English text. We’ve played with GPT-4o a bit and, from what we can tell, it’s faster and smarter within interactions. However, we couldn’t use the voice interaction option yet.

As for availability, GPT-4o has started rolling out:

GPT-4o’s text and image capabilities are starting to roll out today in ChatGPT. We are making GPT-4o available in the free tier, and to Plus users with up to 5x higher message limits. We’ll roll out a new version of Voice Mode with GPT-4o in alpha within ChatGPT Plus in the coming weeks.

OpenAI announcement

So, if you have the ChatGPT app installed on your mobile device, stay tuned for the update.

Also, according to OpenAI, if you’re a developer, you can access GPT-4o in the API as a text and vision model. However, support for GPT-4o’s new audio and video capabilities will launch first to a small group of trusted partners in the API in the coming weeks.
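If you’re curious what “text and vision” looks like in practice, here is a minimal Python sketch of a request that sends a prompt plus an image to the Chat Completions endpoint. It is our own illustration and not code from OpenAI’s announcement: the helper names `build_vision_request` and `send` are made up for this example, and we assume your API key has access to the `gpt-4o` model.

```python
import base64
import json
import urllib.request

# Chat Completions endpoint of the OpenAI API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_vision_request(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build a request body with one text part and one image part.

    Hypothetical helper for this article. The image is embedded
    as a base64 data URL, so no file hosting is needed.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


def send(body: dict, api_key: str) -> dict:
    """POST the body to the API and return the parsed JSON response."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

To try it, read a screenshot with `open("screenshot.png", "rb").read()`, pass the bytes to `build_vision_request` together with your question, and hand the result to `send` along with your API key. The model’s answer should be in the `choices` list of the returned JSON. Audio and video are left out on purpose, since OpenAI says those capabilities are not yet generally available in the API.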

What about a Windows 11 ChatGPT desktop app? Well, there’s a bit of a pickle here because they launched the app only for macOS. Will we need to wait for GPT-4o to be integrated into Copilot?

What do you think about OpenAI’s new GPT-4o model? If you got to use it, tell us about your experience in the comments below.
