Microsoft's Handpose may interpret ASL and control robotic hands in the future

Tracking hand movement is far more difficult than basic skeletal tracking, but that is exactly what researchers at Microsoft are achieving. The system is called Handpose, and it could revolutionize communication, emergency safety, video games, and more. A Microsoft blog post by Allison Linn explains its development and potential uses.

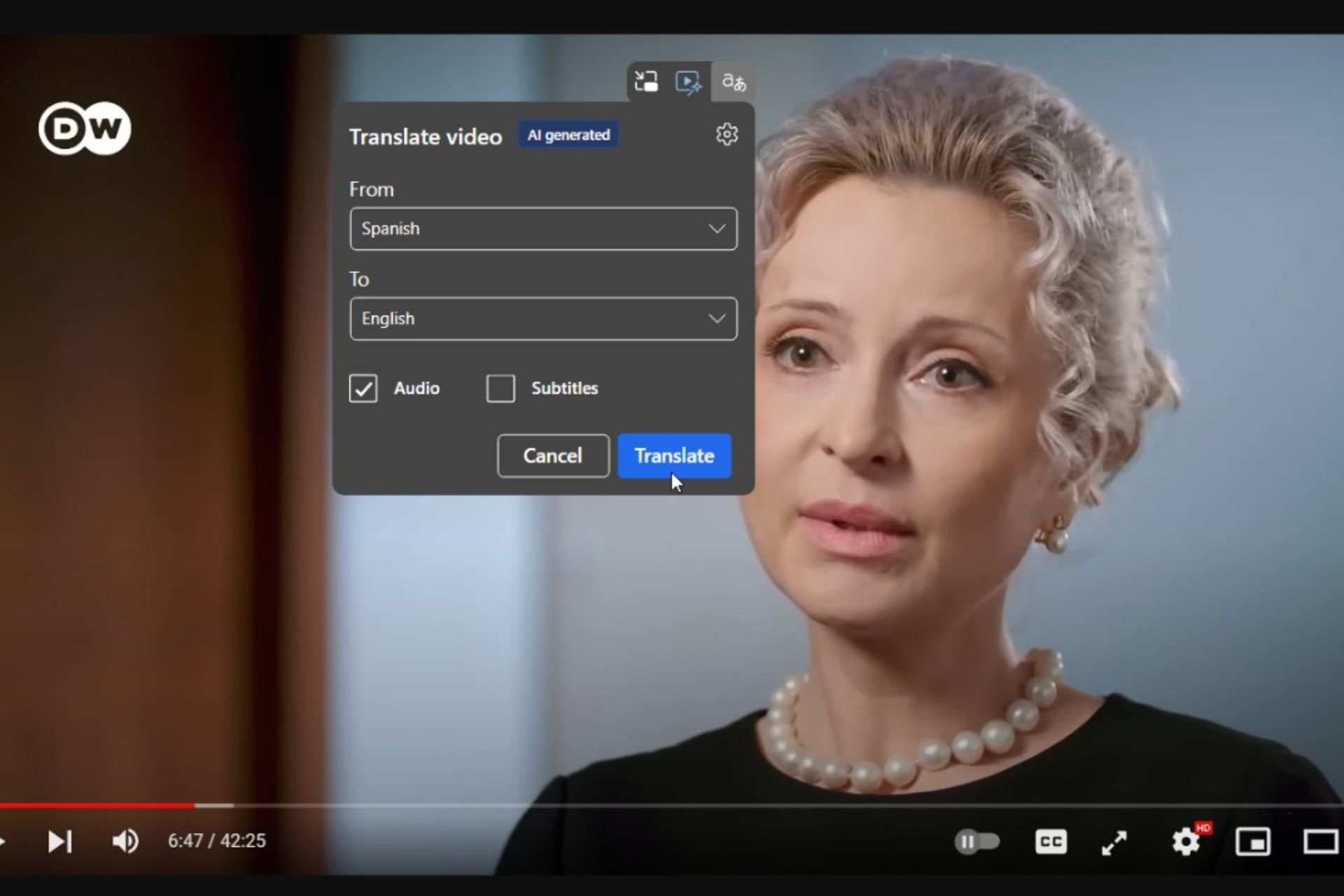

I admit a personal interest in this topic. Some of my best friends are part of the signing community, and a device that could translate American Sign Language in real time would change their worlds. Handpose is not there yet, but the technology could get there someday.

Another use for Handpose is controlling a robotic hand remotely. In a video accompanying the blog post, researcher Jonathan Taylor explains how it could be used in dangerous situations where it would be unsafe for rescuers to go.

Handpose also makes hand tracking practical in more realistic settings. Linn’s blog post explains that “Handpose uses a camera to track a person’s hand movements. The system is different from previous hand-tracking technology in that it has been designed to accommodate much more flexible setups. That lets the user do things like get up and move around a room while the camera follows everything from zig-zag motions to thumbs-up signs, in real time.”

One more use discussed in Taylor’s video is browsing through pages in a way similar to what is seen in ‘Minority Report.’ The Kinect introduced some hand gestures and swipe controls, but Handpose can pick up movements as specific as a pointing finger.

The technology also extends beyond real-world applications. Virtually controlled hands would add a new dimension to video games and virtual reality.

Creating such a system is extremely complicated, even compared to the Kinect’s body tracking. Comparing the two, Taylor explained that “Tracking the hand, and articulation of the hand, is a much harder problem.”

Hands are complicated. Having studied American Sign Language, I can say from firsthand experience that hands move quickly and subtly, and that gestures and movements are easy to confuse. Handpose has to overcome this because, as Linn explains, “we can move them in subtle and complex ways, which can result in fingers that are hidden from the camera. Even fingers that can be seen are difficult to differentiate from each other.”

The researchers developed Handpose using a combination of 3-D hand modelling and machine learning to understand hand movements.