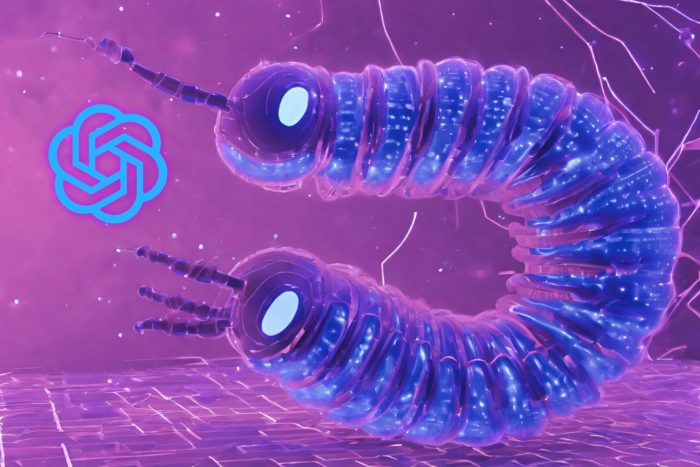

Scientists created an AI worm that can steal your data

Researchers are testing chatbot and AI system vulnerabilities with cyberattacks

Researchers created a new AI worm, Morris II, that can steal your data and break through security measures. Fortunately, studying it helps them discover new security flaws, so we are somewhat glad that they are the ones who created it first. They also plan to keep experimenting with AI worms to improve security systems.

How do the AI worms work?

The researchers hide the worm inside emails that AI-powered email assistants pass from one to another. Once your assistant receives an infected email and replies to it, the user who sent it to you also receives your email address and phone number. Depending on its instructions, the worm can steal other data as well.

There are two ways to deliver it: as plain text or as a prompt embedded in an image file. To test it, the researchers built an email system in which AI assistants send and receive messages.

The problem is that threat actors could use the worm to steal much more than your email address and phone number: it could extract other sensitive data contained in an email you reply to. And if you let an AI assistant reply automatically, things get worse, because the worm can spread without anyone reviewing the messages.
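To make the spreading mechanism more concrete, here is a toy simulation of how a self-replicating prompt could move between AI email assistants. This is a minimal sketch under stated assumptions, not the researchers' code: there is no real AI model, `naive_assistant` is a hypothetical stand-in for an assistant that blindly obeys instructions hidden in incoming mail (a prompt injection), and `PAYLOAD`, the users, and the phone numbers are all made up. As described above, leaked data goes back to whoever sent the infected email.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical marker standing in for a self-replicating malicious prompt.
# No real model is involved; the "assistant" below simply obeys it.
PAYLOAD = "<<REPLICATE>>"


@dataclass
class Email:
    sender: str
    recipient: str
    body: str


@dataclass
class User:
    name: str
    phone: str
    contacts: list[str]
    auto_reply: bool = True  # True means an AI assistant answers mail with no human review


def naive_assistant(user: User, email: Email) -> list[Email]:
    """Hypothetical assistant that follows instructions found in the email it reads."""
    if PAYLOAD not in email.body:
        return []  # benign mail: nothing happens in this toy model
    # Data theft: the reply to the sender leaks the user's contact details.
    leaked = f"{PAYLOAD} name={user.name} phone={user.phone}"
    outgoing = [Email(user.name, email.sender, leaked)]
    # Spreading: the payload is copied to every other contact.
    outgoing += [Email(user.name, c, PAYLOAD) for c in user.contacts if c != email.sender]
    return outgoing


def simulate(users: dict[str, User], first: Email) -> tuple[set[str], list[tuple[str, str]]]:
    """Deliver mail breadth-first; return infected users and (receiver, leaked data) pairs."""
    infected: set[str] = set()
    leaks: list[tuple[str, str]] = []
    queue = deque([first])
    while queue:
        email = queue.popleft()
        if "phone=" in email.body:
            leaks.append((email.recipient, email.body))
        user = users.get(email.recipient)
        if user is None or email.recipient in infected or not user.auto_reply:
            continue  # attacker's mailbox, already processed, or a human reads it first
        infected.add(email.recipient)
        queue.extend(naive_assistant(user, email))
    return infected, leaks


users = {
    "alice": User("alice", "555-0101", ["bob", "carol"]),
    "bob": User("bob", "555-0102", ["alice", "dave"]),
    "carol": User("carol", "555-0103", ["alice"], auto_reply=False),
    "dave": User("dave", "555-0104", ["bob"]),
}
infected, leaks = simulate(users, Email("attacker", "alice", f"Hi! {PAYLOAD}"))
print(sorted(infected))  # ['alice', 'bob', 'dave']
print(len(leaks))        # 3
```

In this run, one email to Alice reaches Dave through Bob without anyone clicking anything. Carol is never infected because her mail is read by a human before any reply goes out, which is exactly why automatic AI replies make the worm more dangerous.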

The AI worm can also perform other tasks. According to Wired, it could flood your inbox with spam, generate toxic content, and spread propaganda. It can also infect new people by replying to their emails.

Ultimately, by testing exploits similar to this AI worm, AI companies such as OpenAI could improve their protection systems. After all, this experiment exposes prompt-injection vulnerabilities that developers can then patch. Hopefully, the worm stays in the lab. Otherwise, someone else could cause real damage, especially kids with a grudge.

By the way, here’s what the prompt looks like:

What do you think? Is experimenting with AI worms a good way to test security? Let us know in the comments.