Russia's biggest tech brand improves LLM training to use 20% less GPU power

The tool is free, and it could save Microsoft and OpenAI millions of dollars

In a world where tech giants are always seeking methods to simplify their operations and reduce expenses, Yandex, the Russian tech behemoth, has thrown a lifeline that could save millions. They’ve revealed an innovative open-source tool named YaFSDP, which aims at enhancing the efficiency of training large language models (LLMs).

By significantly lowering the GPU resources required for training, YaFSDP stands out as a welcome way to cut the steep costs of AI development.

YaFSDP is all about efficiency and cost reduction: Yandex says it uses 20% less GPU resources. That claim is significant, especially when we consider the scale at which companies such as Microsoft, Google and Facebook operate.

Picture how much difference this could make to their bottom line. And this is not only about saving money, it also makes things faster. The time needed for training is reduced by as much as 26% compared to previous methods. It's like having a tool that cuts both costs and time for these businesses.

How does YaFSDP deliver these improvements?

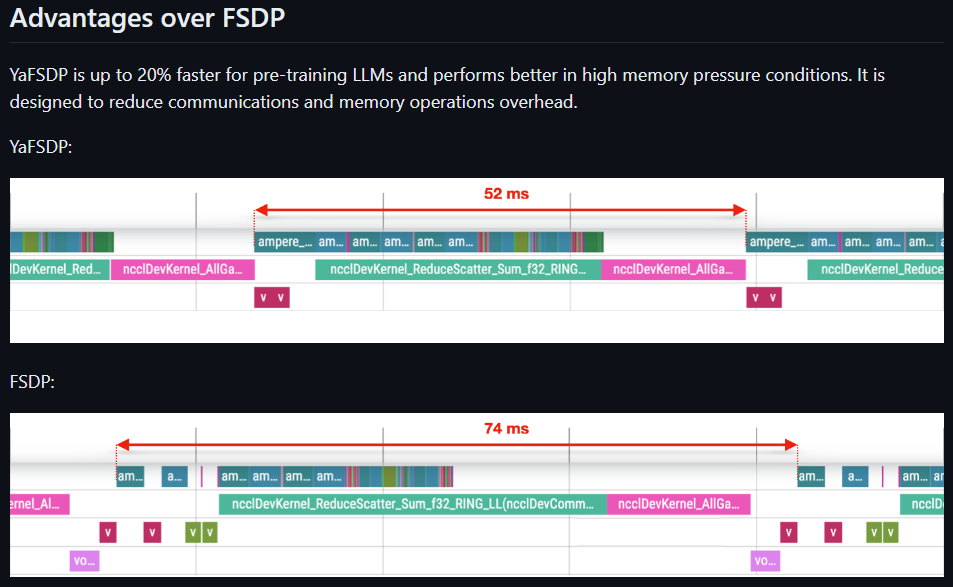

YaFSDP achieves this by improving communication between GPUs and lowering memory usage during training. This means that AI models are trained faster and at lower cost, while still maintaining high quality standards. It feels as though Yandex has found a way to make the machines work smarter, not harder.

In its press release, Yandex also emphasizes the potential savings the new tool can bring. If you train a model with 70 billion parameters, it can save the resources of approximately 150 GPUs. That translates into monthly savings of $0.5 million to $1.5 million, depending on which virtual GPU provider is used.
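To put those figures in perspective, here is a rough back-of-the-envelope sketch of the hourly GPU rental price they imply. The 150 GPUs and the $0.5 to $1.5 million range come from the press release; the 730 hours per month (GPUs running around the clock) and the helper name `implied_hourly_rate` are our own assumptions for illustration, not Yandex's numbers.

```python
# Assumption (not from Yandex): an average month has about 730 hours
# and the saved GPUs would otherwise have been rented around the clock.
HOURS_PER_MONTH = 730

# Figure quoted in the press release.
GPUS_SAVED = 150


def implied_hourly_rate(monthly_saving_usd, gpus_saved, hours_per_month=HOURS_PER_MONTH):
    """Return the per-GPU hourly price implied by a monthly saving."""
    return monthly_saving_usd / (gpus_saved * hours_per_month)


low = implied_hourly_rate(500_000, GPUS_SAVED)
high = implied_hourly_rate(1_500_000, GPUS_SAVED)

print(f"Implied price per GPU-hour: ${low:.2f} to ${high:.2f}")
```

Under these assumptions, the quoted range works out to roughly $4.57 to $13.70 per GPU-hour, which explains why the estimate varies so much from one provider to another.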

However, the real bombshell is that YaFSDP is open source. Yandex has published it on GitHub, so anyone can download and try it out. The company has also released a detailed paper about the tool (English version via Google Translate) if you want to study the method further.

As a side note, the developers show a comparison table with the Llama 2 and Llama 3 LLMs trained on an A100 80G GPU cluster. The Llama models are certainly powerful, but it's unclear how relevant the results are without tests on a wider pool of models.

We will surely see a lot of developments in AI training, but right now, this is highly beneficial for large companies that handle training and processing.

What do you think about the new Yandex YaFSDP and training method? Let us know in the comments below.
