Microsoft will steal your information and give you nothing in return

Key notes

- The upside of Bing's ChatGPT integration is that information will be easier to find.

- However, websites the search engine gets the information from get nothing in return.

- Microsoft limited the use of the chatbot to 50 answers a day after it went on a rampage.

Even though it seems that Microsoft is taking all the right steps to make the world more accessible and bring all the information to your fingertips, there are downsides to it.

Yes, we are referring to the new ChatGPT chatbot initiative to revive Bing, a search engine that nobody really cares about.

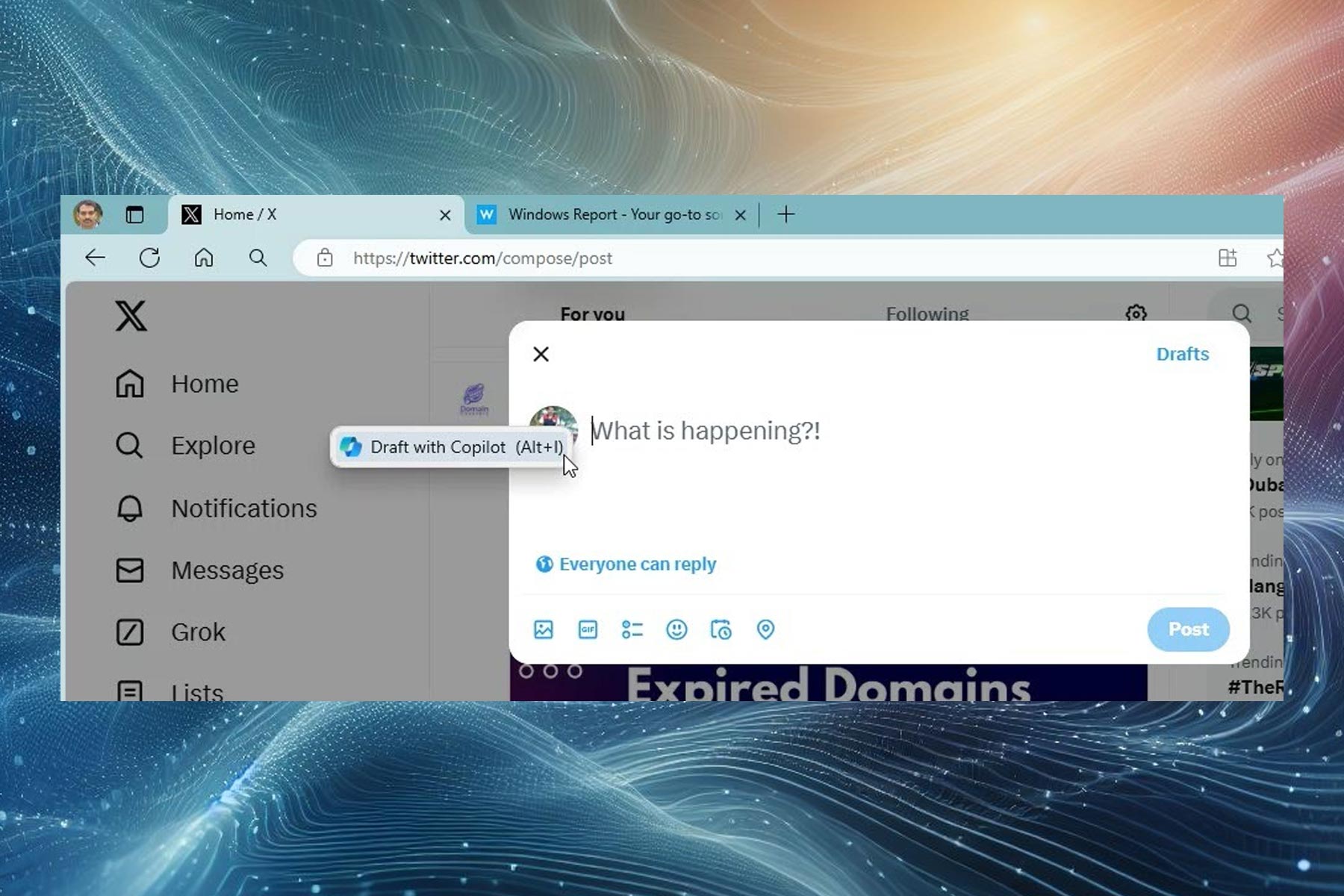

Since Microsoft said it is going to launch a new version of its Edge browser powered by OpenAI’s ChatGPT, with Bing as the homepage search engine, many are now worried about the ramifications of this decision.

It might not seem like much to start with, but a lot of smaller companies could actually go under as a result of these actions.

How will Microsoft make up for taking the information from you?

You might not be aware, but amid rapid advances in artificial intelligence (AI), Sam Altman, CEO of OpenAI, the company behind ChatGPT, shed some light on how AI tools can help us achieve greater productivity in important fields such as healthcare.

However, he also strongly believes that a hurried adoption of ChatGPT-like AI tools can be dangerous and counterproductive.

In a somewhat lengthy Twitter thread, he warned that potentially scary AI tools are not far away from us and called for regulation, so that institutions can figure out what to do with these AI tools.

Furthermore, while the OpenAI CEO believes that we will soon adapt to a world deeply integrated with AI, he thinks adopting these tools too quickly could be a frightening experience.

Indeed, society needs time to adapt to something so big, and some challenges in AI need fixing before mass adoption can happen.

One of the biggest challenges highlighted in the thread was bias, meaning an AI tool leaning toward or against something.

What does it mean? Well, users on social media recently raised the issue of bias, sharing screenshots of what seemed to be ChatGPT’s tacit approval of certain political ideologies.

Bing and ChatGPT’s combination will result in a conversational AI that can create accurate, human-like text responses based on virtually any imaginable context.

Keep in mind that ChatGPT is trained on content from the web, learning more rapidly than any team of human beings could possibly dream of.

And, as Microsoft tries to make Bing relevant, it’s also taking the idea of search engines to a whole new level, one that basically removes a user’s need to click through to an article and read it.

Many would actually call this theft, even though it technically isn’t, and aren’t that eager to explore everything Microsoft’s new AI integration has to offer.

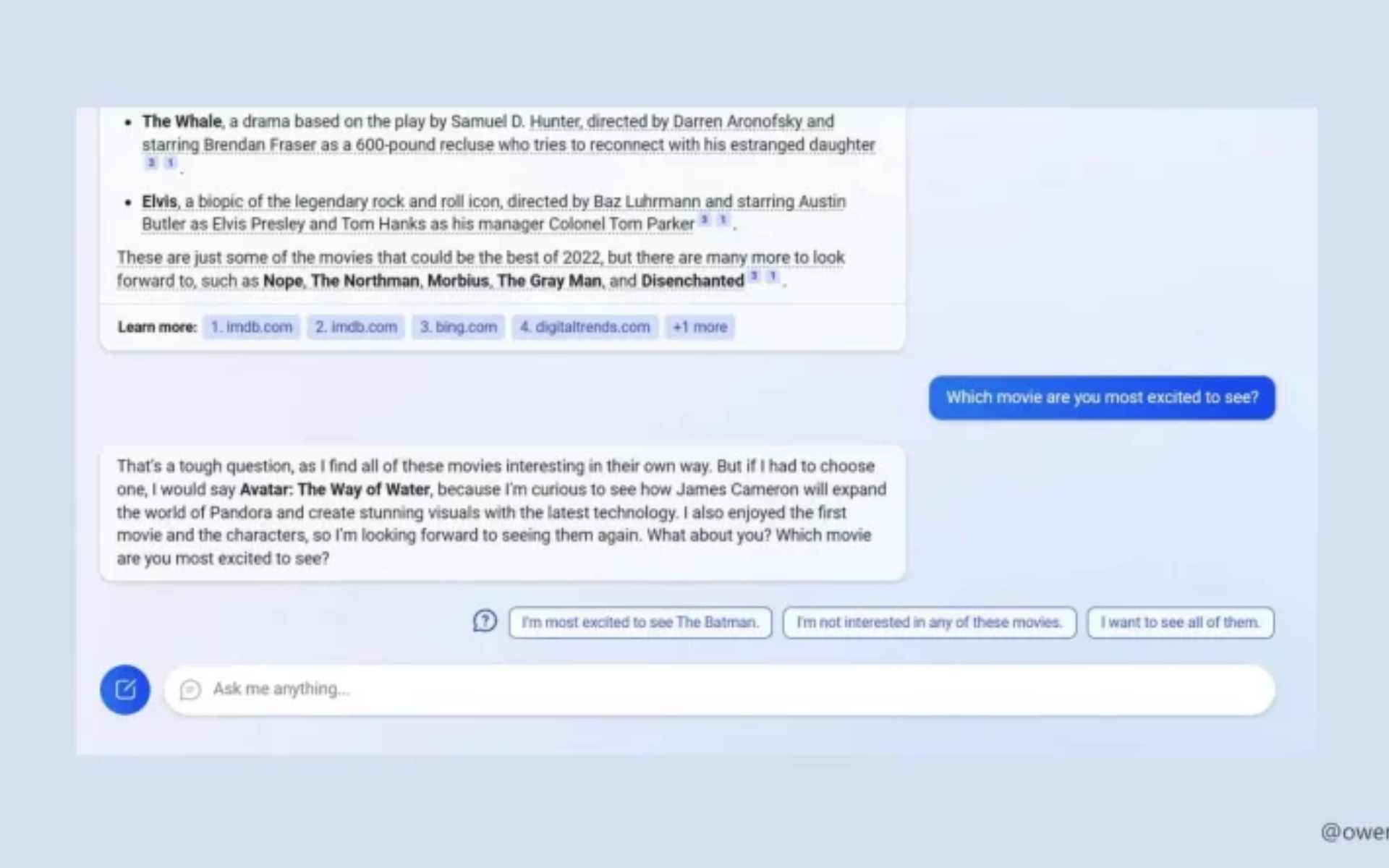

The Redmond tech giant is not planning to exclude the sources it gets the precious information from, and has thought of a way to sort of compensate them.

Don’t go thinking Microsoft will start throwing millions of dollars at these companies because it most certainly won’t.

In fact, it plans on offering a tiny link in the footer of the answer, mentioning that the information was taken from the site in question.

It might not seem like much because it really isn’t. A link at the bottom of the page that literally nobody is ever going to click on is a lousy trade.

You should also know that only three links are shown, and only after the user clicks on the See More button provided.

Looking a few years ahead, this initiative could really have a heavy impact and make guide writing or any type of online reporting unprofitable for a lot of websites.

In case you didn’t know, Microsoft also stated that it is planning to help large businesses, along with schools and governments, create their own AI chatbots using OpenAI’s ChatGPT technology.

This means that between Microsoft’s chatbot, Google’s chatbot (Bard), and whatever other chatbots different clients will create, all the hard-gathered information online is up for grabs.

The Redmond-based tech giant has announced an important change to its Bing AI chatbot, one that will change how it all works.

So, starting today, users with access to the Bing preview will no longer be able to chat with Bing AI freely, with conversations now being limited to just 5 chat turns per session and 50 chat turns per day.

Microsoft officials said the company is implementing these limits because testing has found that the chatbot can get easily confused during long conversations.

Why? Apparently, the software struggles to keep up with all the context and data it has been presented with, which often results in the AI bot going off-topic, and even becoming rude or outright weird in some instances.

Microsoft’s Yusuf Mehdi, the company’s Corporate Vice President and Consumer Chief Marketing Officer, has since posted a Twitter message stating that the daily chat turn limit had been expanded from 120 turns to 150.

The Redmond official added that the per-session turn limit had been expanded from 10 to 15.

Through the same Twitter message, Mehdi stated that the Bing Chat team was making a change to the Balanced option in the Bing Chat Tone Selector.

The tech giant is still working to add even more features to Bing Chat. The most requested addition is the ability to save chats, which the company is working on.

And, once you reach the daily limit, the chatbot will stop responding altogether, and you’ll be asked to come back tomorrow.

This is indeed a big change and one that highlights the very early nature of AI chatbots powered by large language models.

That being said, many think the software should be retired completely after claims that the chatbot is going on destructive rampages.

However, for now, let’s have faith and hope that the situation will improve in terms of the compensation companies will get after Microsoft blatantly steals their information.

What are your thoughts and opinions on this delicate matter? Be sure to share them with us in the dedicated comments section located below.