Hyperreal video calls that allow for eye contact between virtual participants might be coming to Teams

The technology would make Teams meetings more immersive.

Microsoft is working on a technology that enables hyperreal video calls with eye contact between virtual participants, according to a recently published patent filed by the Redmond-based tech giant.

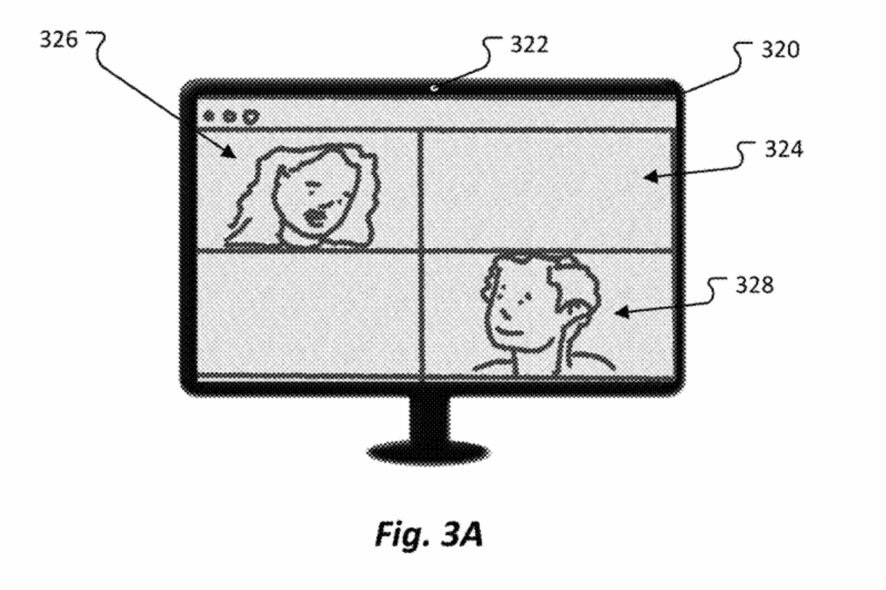

The patent describes a technology that automatically adjusts the gaze of participants in a video conference, on platforms such as Microsoft Teams or Skype, depending on the position of the active participant.

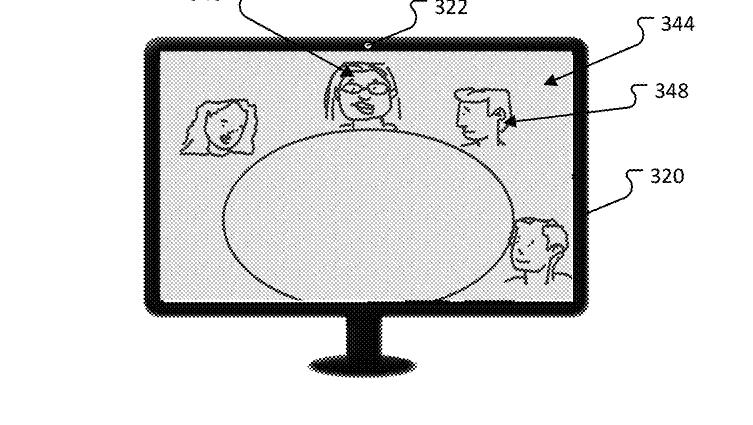

The technology uses an eye gaze tracker to follow the gaze of each participant in the meeting. It would then automatically adjust how that participant appears on screen to create a sense of virtual immersion, as you can see in the illustration above. Here’s what the patent says:

More specifically, an eye gaze tracker may be used to determine a location at which the eye gaze of a participant is directed. If the eye gaze is directed to another participant taking part in the video conference, then the eyes, head pose, and/or upper body portion of the participant may be modified such that the eye gaze of the participant as displayed in the video conferencing application appears to others to be directed towards the previously identified other participant.

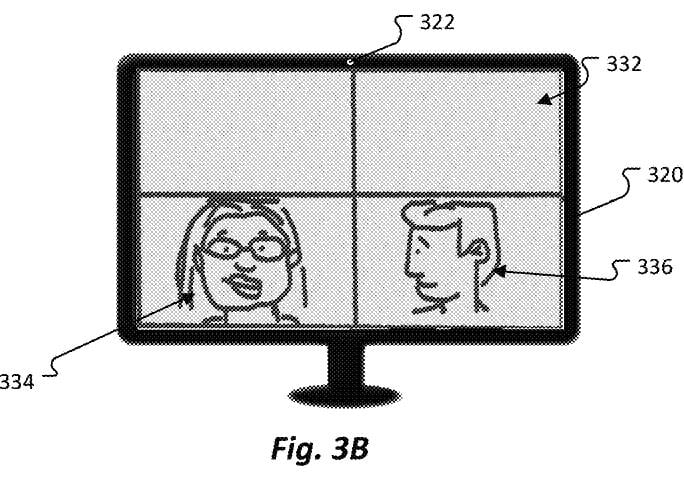

The patent also showcases a scenario where the participants can make eye contact against a shared virtual background, giving the entire virtual meeting a hyperreal feel. The patent includes an illustration of this scenario.

How does this technology work?

- The system receives information about how to adjust the images in a video stream of a participant.

- It identifies where this participant’s images are displayed in the video conference layout.

- Based on this information, it figures out where another participant’s images are displayed.

- The system then calculates the direction of the first participant’s gaze based on the location of the second participant’s images.

- It creates new images where the first participant appears to be looking at the second participant.

- These new images replace the original ones in the video stream.

In other words, it’s a system that makes it look like the active participant is making eye contact with someone else during a video call.
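The gaze calculation in the middle of that list can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the method from the patent: the `Tile` class, the `gaze_direction` and `gaze_angle_degrees` functions, and the three-person layout are all hypothetical names and values. The sketch covers only the geometry of working out which way a participant should appear to look, and it leaves out the final step of generating the modified images.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Tile:
    """Hypothetical rectangle a participant occupies in the call layout (pixels)."""
    x: float
    y: float
    width: float
    height: float

    @property
    def center(self) -> Tuple[float, float]:
        return (self.x + self.width / 2, self.y + self.height / 2)


def gaze_direction(source: Tile, target: Tile) -> Tuple[float, float]:
    """Unit vector pointing from the source tile's center to the target's.

    Screen coordinates are assumed: x grows to the right, y grows downward.
    """
    sx, sy = source.center
    tx, ty = target.center
    dx, dy = tx - sx, ty - sy
    length = math.hypot(dx, dy)
    if length == 0:
        # Same position: there is no meaningful direction to look in.
        return (0.0, 0.0)
    return (dx / length, dy / length)


def gaze_angle_degrees(source: Tile, target: Tile) -> float:
    """Angle of the gaze direction: 0 is right, 90 is straight down."""
    dx, dy = gaze_direction(source, target)
    return math.degrees(math.atan2(dy, dx))


# Assumed 2x2-style grid on a 1280x720 canvas, with three participants.
layout = {
    "alice": Tile(0, 0, 640, 360),
    "bob": Tile(640, 0, 640, 360),
    "carol": Tile(0, 360, 640, 360),
}

# Alice is looking at Bob, whose tile sits directly to her right.
print(gaze_direction(layout["alice"], layout["bob"]))
```

A real system would feed a direction like this into an image synthesis step that redraws the participant's eyes, head pose, or upper body, which is the part the patent is actually about.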

In many ways, the technology is similar to the existing IntelliFrame feature in Microsoft Teams. IntelliFrame uses AI to automatically detect and identify participants in Teams meetings, and it also creates immersion by simultaneously streaming multiple perspectives of the meeting.

Microsoft added IntelliFrame to Teams to create a more realistic virtual meeting. Remote participants will also be able to stream several IntelliFrame videos at once, so they see a panoramic view of the meeting as well as a central view of the person who is currently speaking.

However, the technology described above takes the IntelliFrame feature to another level, as it artificially creates immersion by manipulating images into a single frame.

Could this become a feature in Microsoft Teams? Well, it most likely will, as Microsoft has already started adding more immersive features to the platform. Recently, the Redmond-based tech giant introduced Microsoft Mesh for Teams, which allows developers to build their own virtual spaces that conference participants can visit, albeit in avatar form.

But virtual spaces are not the same as the real thing, and this feature specifically could make Teams meetings far more enjoyable and engaging for everyone.

One can hope, though.