Microsoft AI CEO, Mustafa Suleyman, says everything you post on the Internet should be used to train AI

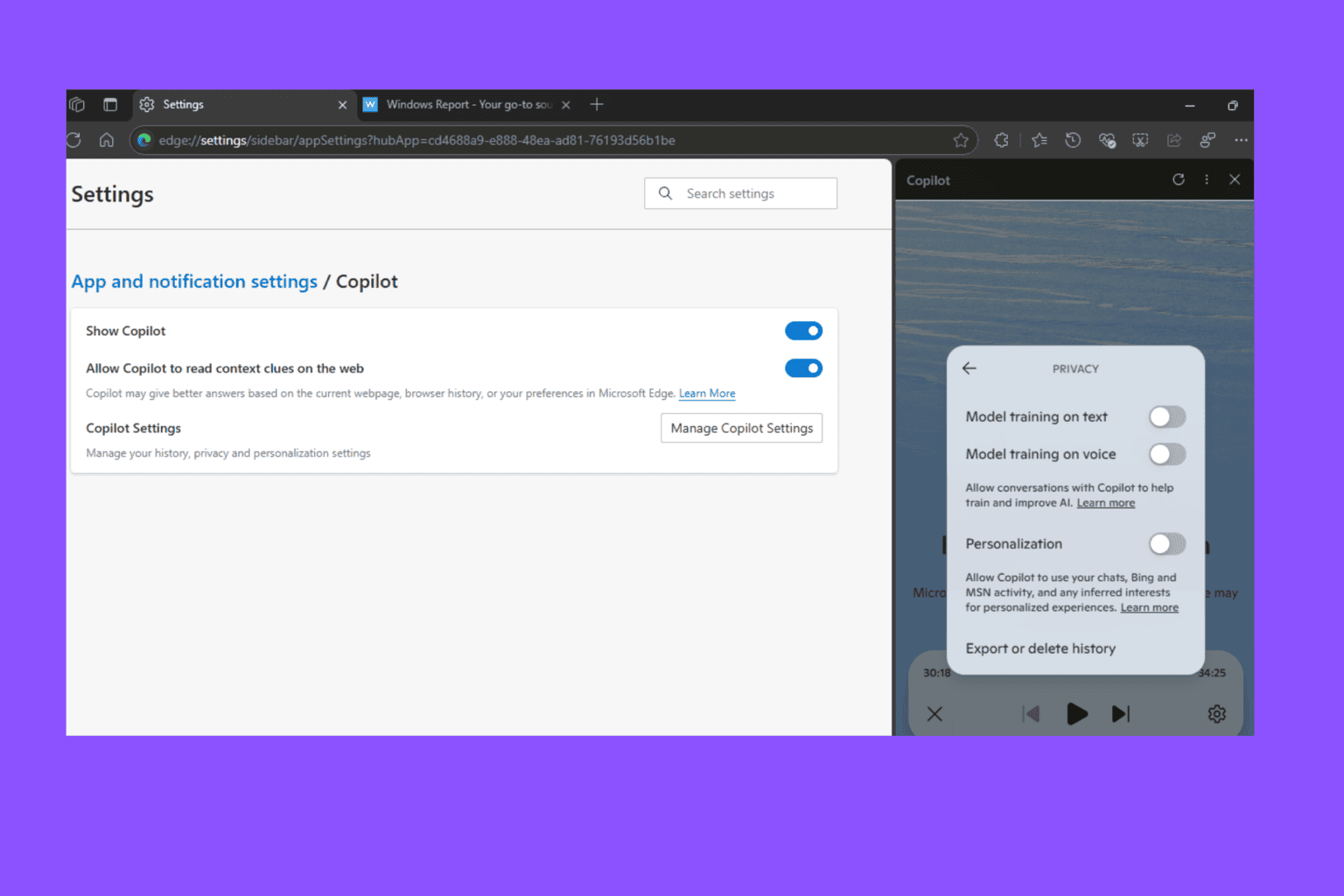

Microsoft has been sued by creators, newspapers, and other organizations for using their content to train Copilot.

Read our disclosure page to find out how you can help Windows Report sustain the editorial team.

Mustafa Suleyman, CEO of Microsoft AI, argues that almost everything published on the open web can be used for AI training, a view that is making waves in the digital world. The claim highlights the delicate balance between the internet’s openness and the rights of those who create content, and it sets the stage for complex legal and ethical debates.

In an interview with NBC News at the Aspen Ideas Festival, Suleyman said:

With respect to content that’s already on the open web, the social contract of that content since the 90s has been that it is fair use. Anyone can copy it, recreate with it, reproduce with it. That has been “freeware,” that’s been the understanding.

There’s a separate category where a website, a publisher, or a news organization has explicitly said do not crawl or scrape me for any other reason than indexing me so that other people can find this content. That’s a grey area, and I think it’s going to work its way through the courts.

Mustafa Suleyman

Imagine you are a creator who pours passion and dedication into a piece of work, only to discover it has been used to train AI without your consent or any payment. Many people face exactly this situation, as companies such as Microsoft assert that content on the internet is free for AI training by default.

Suleyman’s comments at the Aspen Ideas Festival invoke a historical “social contract” of the internet under which content is treated as “freeware.” But is that a fair assumption? Doesn’t it ignore the rights of the people who create the content?

The dispute is not merely theoretical: lawsuits are being filed against big companies such as Microsoft. Content creators, from newspapers to individual authors, claim that their work is being used unlawfully to train AI models like Copilot and ChatGPT. These legal battles reflect a growing concern about the misuse of intellectual property in the AI era.

Suleyman’s viewpoint might appear practical from the standpoint of AI development, but it raises important questions about where content creation is headed. If everything on the internet is free for AI training, why would creators keep producing original work? The concern is that creating content will no longer be seen as valuable, which would reduce the variety and quality of what we find online.

Suleyman also predicts a time when AI could reduce the cost of creating new knowledge to almost zero. The idea of an open, globally available knowledge base sounds idealistic, but it also threatens creators’ livelihoods. The challenge is to strike a balance that encourages innovation without undermining the rights and rewards of content creators.

In these uncertain times, it is clear that the debate over AI and copyright law is far from settled. Existing legal and ethical frameworks might not be enough to handle the new complexities AI brings.

So where should the line be drawn? How can we protect people’s rights while still making use of AI’s enormous potential? These are important questions that deserve our attention.
