Microsoft's Project Rumi AI can interpret your expressions

Project Rumi is capable of human-like responses.

Key notes

- Project Rumi integrates your physical expressions to form an opinion on your inputs.

- The AI model will respond to you according to your attitude.

- Project Rumi is presented as an AI breakthrough, as it allows AI models to respond in a more human-like way.

Microsoft has invested a lot of resources in AI research over the past months. There is LongMem, which offers unlimited context length, and Kosmos-2, which visualizes spatial concepts and offers its own input about them. Then you have Orca 13B, which is open source, so you can use it to train your own AI models.

There is also phi-1, which is very capable of learning complex blocks of Python. And Microsoft has even supported the research on creative AI, such as DeepRapper, which is, yes, you guessed it, an AI rapper.

The Redmond-based tech giant is ahead of the curve when it comes to AI. It recently announced a partnership with Meta around Llama 2, an LLM whose largest version has 70 billion parameters.

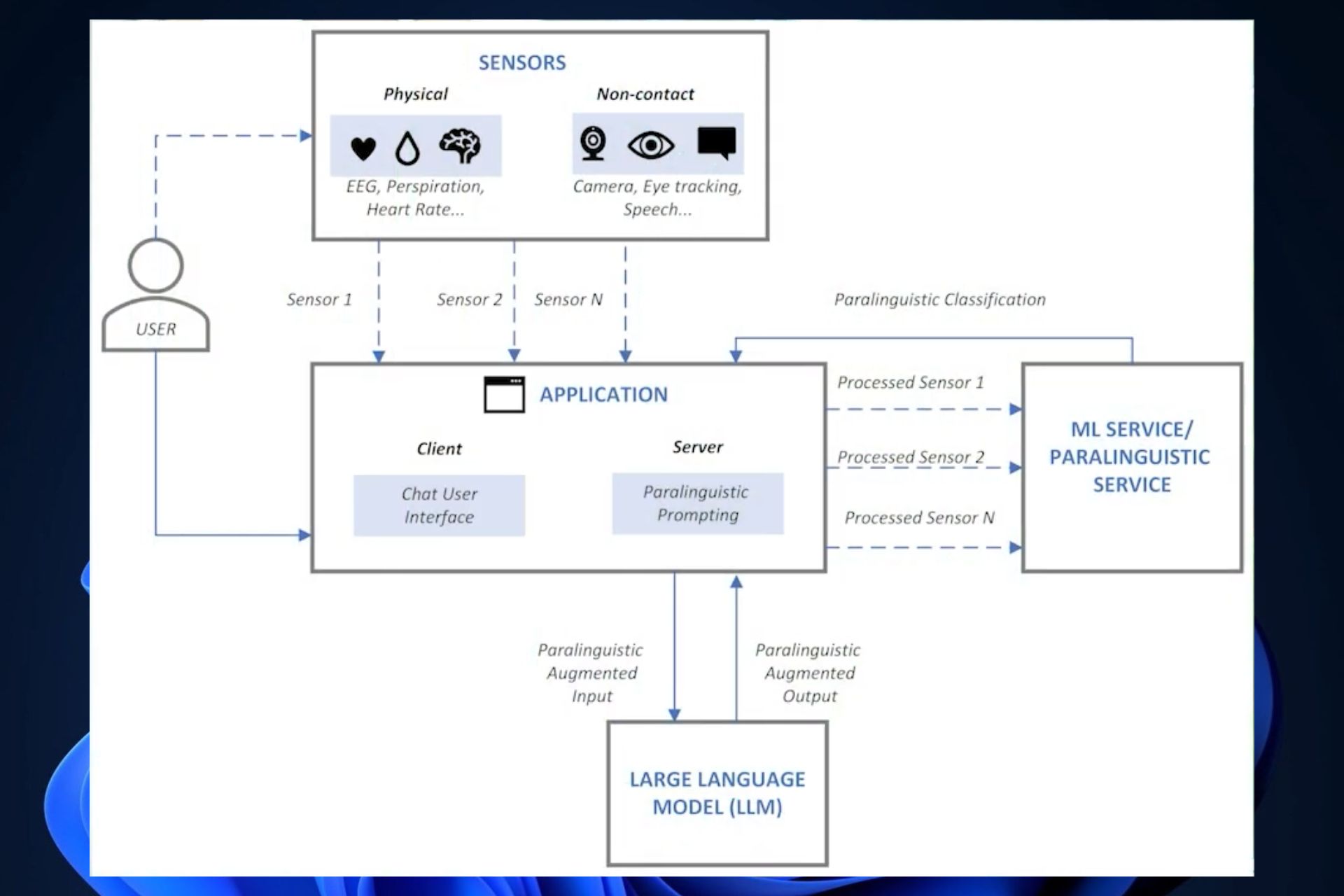

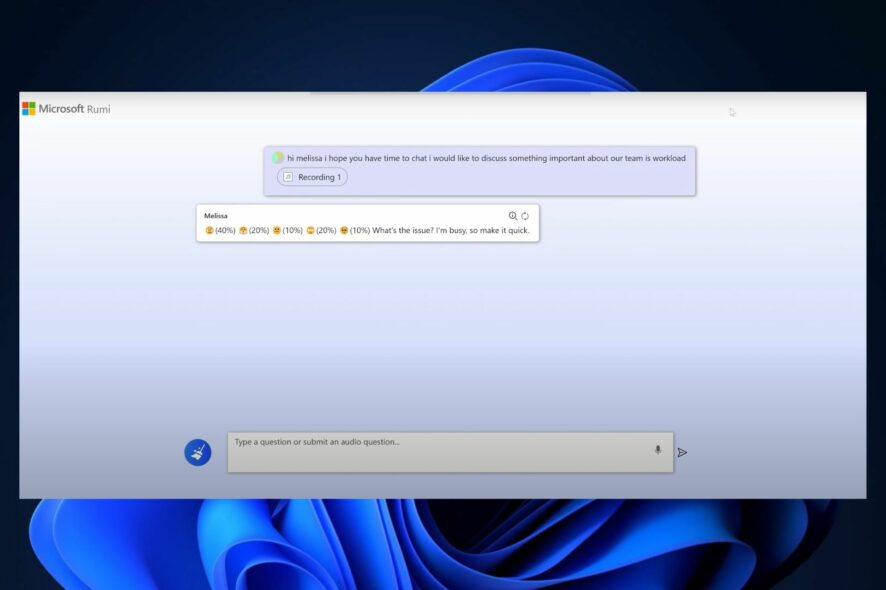

And now, it seems Microsoft is also investing in Project Rumi, an AI model capable of incorporating paralinguistic input, such as facial expressions and tone of voice, in its interactions. This could be a breakthrough in AI, bringing other models closer to a human-like response system.

What is Microsoft Project Rumi?

Microsoft Project Rumi is a large language model capable of integrating your physical expressions to form an opinion on your attitude and then respond to you accordingly. This means that if you come off as angry, the model will read your facial expression and listen to your tone of voice.

It will then form an answer that matches your attitude.

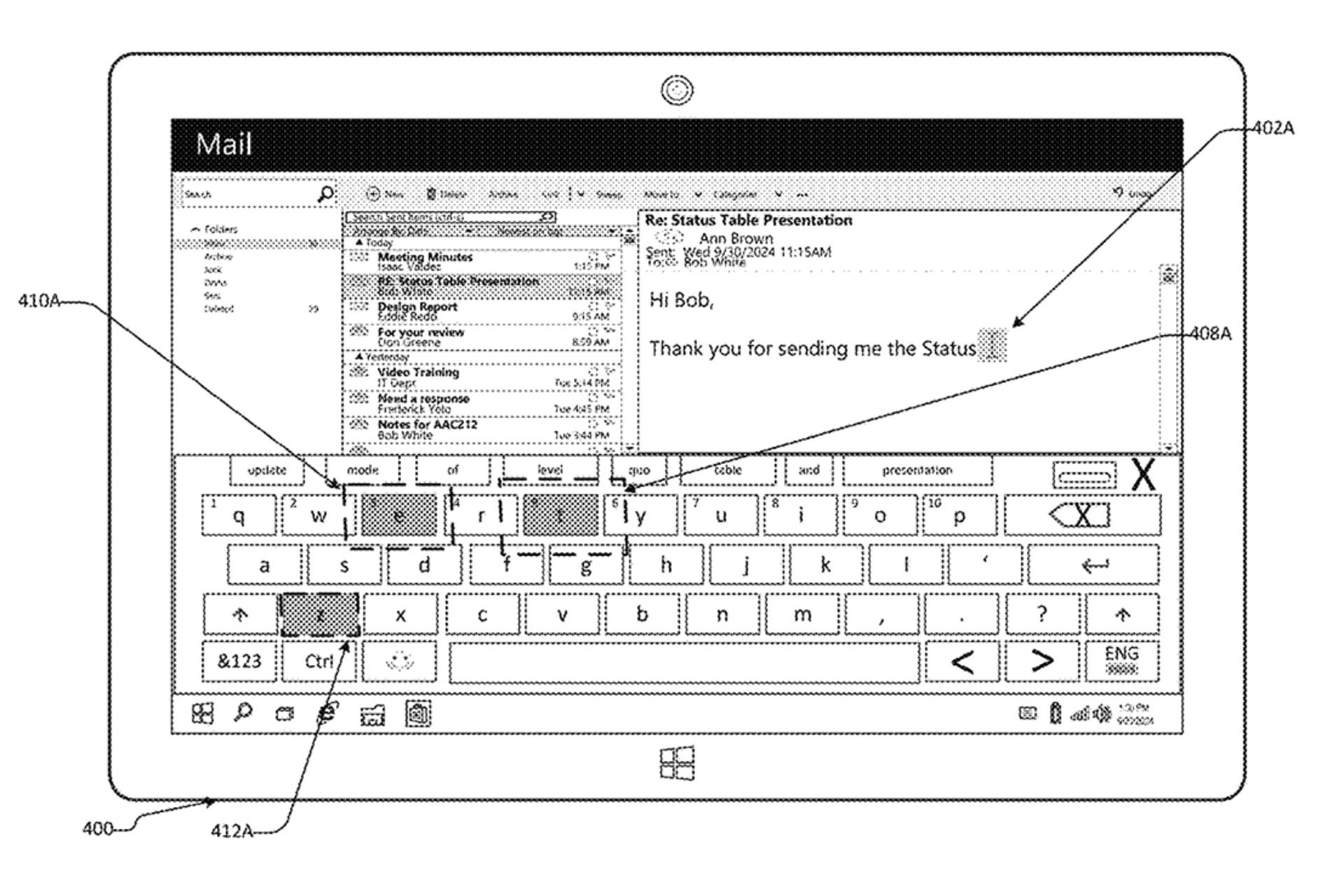

Project Rumi does this by accessing your microphone and webcam, recording your face to make sense of your physical expressions.

Microsoft has patented Project Rumi in an attempt to address the limitations of current AI models when it comes to their inputs. For example, Bing AI cannot see your facial expressions or hear your tone of voice when you ask it to do something. As a consequence, its answers come across as somewhat artificial and non-human.

Microsoft Project Rumi addresses these limitations by using existing technology to capture your expressions. In turn, Project Rumi learns human expressions and builds its behavior based on them.
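To make the idea more concrete, here is a minimal Python sketch of how paralinguistic cues could be attached to a text prompt before it reaches a language model. This is not Microsoft's implementation: the `ParalinguisticCues` class, the `build_prompt` function, and the cue labels are all hypothetical names made up for illustration, and the sketch assumes that some separate camera and microphone pipeline has already turned the raw video and audio into short text labels.

```python
from dataclasses import dataclass


@dataclass
class ParalinguisticCues:
    """Hypothetical container for cues derived from a webcam and microphone."""
    facial_expression: str  # e.g. "frustrated" (assumed label, not a real API value)
    voice_tone: str         # e.g. "tense" (assumed label, not a real API value)


def build_prompt(user_text: str, cues: ParalinguisticCues) -> str:
    """Prefix the user's text with a plain-language note about their apparent attitude."""
    context = (
        f"[The user appears {cues.facial_expression} "
        f"and sounds {cues.voice_tone}.]"
    )
    return f"{context}\n{user_text}"


# Example: the same question, annotated with how the user seems to feel.
prompt = build_prompt(
    "Why is my update still failing?",
    ParalinguisticCues(facial_expression="frustrated", voice_tone="tense"),
)
print(prompt)
```

A model receiving the annotated prompt would, in principle, have enough context to adjust its tone, for example by answering more patiently when the user seems frustrated.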

As Project Rumi is an LLM, the model will be used to train other AI models. So, you’ll soon be able to interact with a human-like AI. Does it sound cool or not? What do you think?