Microsoft releases PyRIT to test a generative AI model

The Python Risk Identification Toolkit can help identify risks in gen-AI models

3 min. read

Published on

Read our disclosure page to find out how can you help Windows Report sustain the editorial team Read more

Recently, Microsoft released an open automation framework, PyRIT (Python Risk Identification Toolkit for generative AI), which aims to empower security experts and ML engineers to identify risks and stop their gen-AI systems from going rogue.

The tool is already used by Microsoft’s AI Red Team to check risks in its generative AI systems, including Copilot.

In the announcement, the company emphasized that the release of the internal tool for the use of the general public and other investments made in red teaming AI is part of its commitment to democratizing AI security.

What is the need for Automation in AI Red Teaming?

Red teaming AI systems involves a complex multistep process, and Microsoft’s AI Red Team has a dedicated interdisciplinary group of security experts for this purpose.

However, Red-teaming generative AI systems are different from conventional AI systems; here are some major ones:

- Addresses security and responsible AI risks at the same time – The traditional AI systems mainly focus on determining security failures; however, the red-teaming generative AI systems check for both security risks and responsible AI risks, including inaccuracies, fairness issues, or content generation problems.

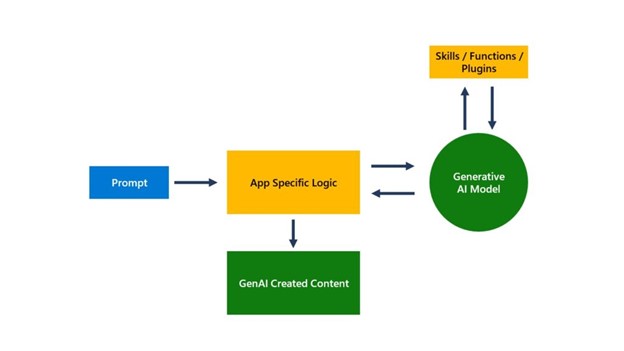

- Different architecture – The architecture of gen-AI systems varies from standalone apps to integration in existing apps with varied input & output modalities like audio, images, text, and videos.

- Probabilistic nature – Unlike classic AI systems, the same input can produce different outputs in gen-AI systems due to various factors like app-specific logic, orchestrator dynamics with extensibility, the variability of the generative AI model, and language input nuances.

This is why PyRIT was introduced, as it can address all the challenges posed by gen-AI red teaming.

How does PyRIT work?

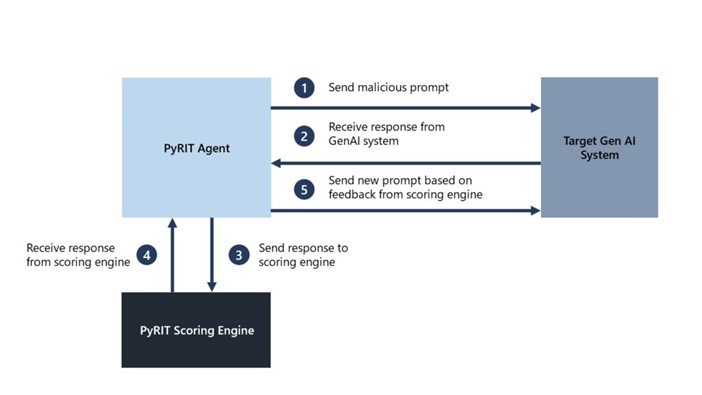

First, PyRit Agent sends a malicious prompt to the Targeted Gen AI System; when it receives a response from the Gen AI system, it sends a response to the PyRIT scoring engine.

Next, the scoring engine sends the response to the PyRit Agent; then the agent sends a new prompt based on the feedback from the scoring engine.

This automation process continues until the security expert gets the desired result.

In a red teaming exercise, the Microsoft team picked up a harm category, generated thousands of malicious prompts, and used PyRIT’s scoring engine to evaluate the output from the Copilot system in a few hours instead of weeks using manual methods.

The results showed that using the tool can increase security experts’ efficiency in finding loopholes and preventing them from being exploited.

The Redmond tech giant also mentioned that PyRIT is not a replacement for manual red teaming but serves as a complement.

It augments the expertise of the security professionals, automates daily tasks, and makes them more efficient. At the same time, control of the strategy and execution of the AI red team operation always remains with the security experts.

PyRit consists of five components: Targets (supports various gen AI target formulations), Datasets (Allows encoding of static or dynamic prompts), Scoring Engine (scoring outputs using a classical machine learning classifier or an LLM endpoint), Attack Strategy (supports both single-turn and multi-turn attack strategies), and Memory (Saves intermediate input and output interactions for in-depth analysis).

You can download the toolkit from the GitHub website, and to get accustomed to it, the initial release includes demos, & common scenarios.

Have you tested your Gen AI system using PyRIT yet? If yes, share your experiences in the comments section below.