Microsoft's latest patent reveals technology capable of stopping AI from hallucinating

Microsoft announced earlier this year that it was going to tackle the issue.

One of AI’s biggest challenges today is its tendency to hallucinate, that is, to fabricate false information just to complete a task. While many AI models on the market can draw on the Internet to respond as accurately as possible, in a long enough conversation the AI will sometimes base its answers on unfounded information.

Microsoft started working on a tool to mitigate AI hallucinations earlier this year, and the Redmond tech giant has now also published a paper describing a patented technology that could prevent hallucinations from happening in the first place.

The paper, “Interacting with a language model using external Knowledge and Feedback”, describes how this would work.

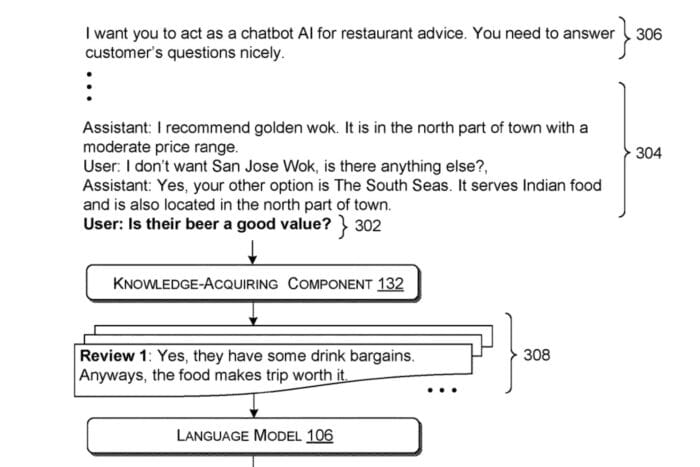

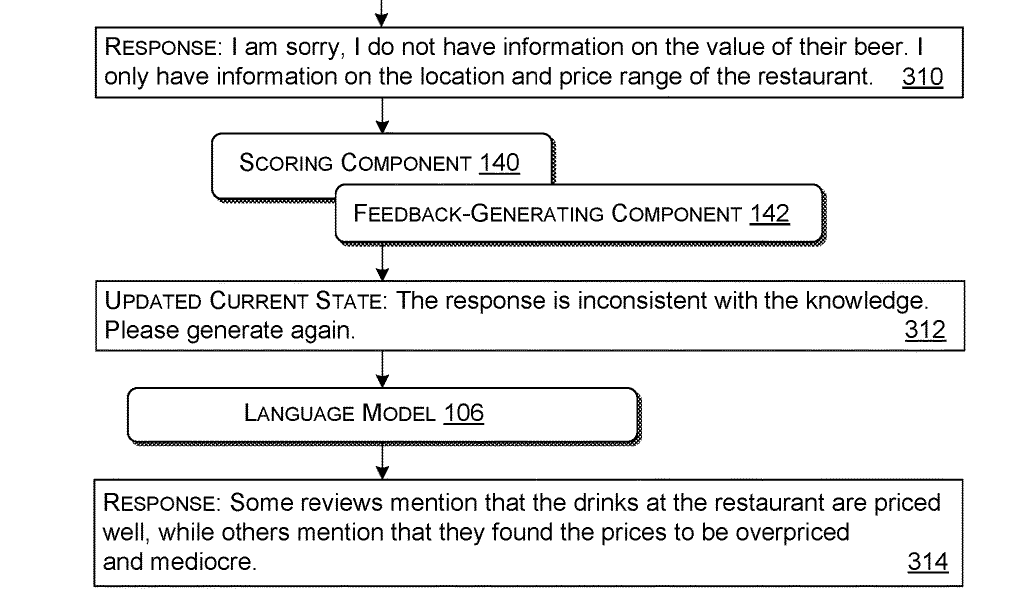

First, the system receives a query, that is, a question or request sent to it. Based on that query, it retrieves relevant knowledge from external sources. It then generates the original model input information by combining the query and the retrieved data into a single package that the language model can use.
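The patent doesn’t come with code, but the retrieval and packaging step can be illustrated with a minimal Python sketch. Everything in it is an assumption for illustration: the `KNOWLEDGE_BASE` dictionary stands in for external sources, the naive keyword matching in `retrieve_knowledge` stands in for real search, and `ModelInput` and `build_model_input` are hypothetical names, not Microsoft’s.

```python
from dataclasses import dataclass

# Hypothetical external knowledge source. A real system might query a search
# engine, database, or document store; these entries are invented examples.
KNOWLEDGE_BASE = {
    "opening hours": "The restaurant is open from 11:00 to 22:00, Tuesday to Sunday.",
    "vegan options": "The menu has three vegan dishes, including a lentil curry.",
    "parking": "Free parking is available behind the building.",
}


@dataclass
class ModelInput:
    """The single package handed to the language model (hypothetical structure)."""
    query: str
    evidence: list[str]

    def to_prompt(self) -> str:
        """Render the package as prompt text: retrieved facts first, then the query."""
        facts = "\n".join(f"- {fact}" for fact in self.evidence)
        return f"Known facts:\n{facts}\n\nQuestion: {self.query}"


def retrieve_knowledge(query: str, knowledge_base: dict[str, str]) -> list[str]:
    """Naive retrieval: keep facts whose topic words all occur in the query."""
    q = query.lower()
    return [fact for topic, fact in knowledge_base.items()
            if all(word in q for word in topic.split())]


def build_model_input(query: str) -> ModelInput:
    """Combine the query and the retrieved data into one package."""
    return ModelInput(query=query, evidence=retrieve_knowledge(query, KNOWLEDGE_BASE))
```

For example, `build_model_input("What are your opening hours?")` returns a package whose evidence holds only the opening-hours fact, which a real system would then pass to the language model.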

This combined package is presented to the language model, which generates an initial response based on it.

The system then evaluates the initial response against predefined usefulness criteria, such as relevance, to determine whether it is good enough. If the initial response falls short (for example, because it contains hallucinated information), the system generates revised input information that includes feedback on what is missing or incorrect.
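The usefulness check and the feedback step could look something like the following sketch. The criterion is a deliberately crude stand-in chosen for illustration, not the one in the patent: a response fails if it is empty or contains numbers (such as times) that don’t appear in the retrieved facts. `check_usefulness`, `revise_input`, and `ModelInput` are hypothetical names.

```python
import re
from dataclasses import dataclass, field

# Matches plain numbers and clock times such as "22:00".
NUMBER_PATTERN = r"\d+(?::\d+)?"


@dataclass
class ModelInput:
    """Hypothetical input package: query, retrieved facts, and accumulated feedback."""
    query: str
    evidence: list[str]
    feedback: list[str] = field(default_factory=list)


def check_usefulness(response: str, evidence: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the response passes.

    Assumed criterion: the response must not be empty, and every number in it
    must also appear in the evidence (a crude proxy for "not hallucinated").
    """
    problems = []
    if not response.strip():
        problems.append("The response is empty.")
    supported = set(re.findall(NUMBER_PATTERN, " ".join(evidence)))
    for number in re.findall(NUMBER_PATTERN, response):
        if number not in supported:
            problems.append(f"'{number}' is not supported by the retrieved facts.")
    return problems


def revise_input(original: ModelInput, problems: list[str]) -> ModelInput:
    """Build revised input: same query and evidence, plus feedback on what was wrong."""
    return ModelInput(original.query, original.evidence, original.feedback + problems)
```

Checking "We are open from 11:00 to 23:00." against the fact "Open from 11:00 to 22:00." flags `23:00` as unsupported, and that message becomes feedback in the revised input.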

This revised package is presented to the language model again, and the model generates a new response based on the revised input.
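Put together, the query, check, and re-prompt cycle amounts to a simple loop. In this hedged sketch, `toy_model` is a purely illustrative stand-in that "hallucinates" a closing time until it receives feedback; a real system would call an actual language model. The usefulness test reuses the same crude number-matching assumption, and `max_rounds` is a hypothetical cap so the loop can’t run forever.

```python
import re
from typing import Callable

# Hypothetical stand-in type for a language model: prompt text in, response text out.
LanguageModel = Callable[[str], str]


def unsupported_numbers(response: str, evidence: str) -> list[str]:
    """Crude usefulness test (an assumption): numbers must come from the evidence."""
    pattern = r"\d+(?::\d+)?"
    allowed = set(re.findall(pattern, evidence))
    return [n for n in re.findall(pattern, response) if n not in allowed]


def answer_with_feedback(query: str, evidence: str, model: LanguageModel,
                         max_rounds: int = 3) -> tuple[str, int, bool]:
    """Query the model, test the answer, and re-prompt with feedback until it passes.

    Returns (last response, rounds used, whether the last response passed).
    """
    prompt = f"Facts: {evidence}\nQuestion: {query}"
    response = ""
    for round_number in range(1, max_rounds + 1):
        response = model(prompt)
        problems = unsupported_numbers(response, evidence)
        if not problems:
            return response, round_number, True
        # Revised input: the original package plus feedback on what was wrong.
        prompt += "\nFeedback: " + "; ".join(f"{p} is not in the facts" for p in problems)
    return response, max_rounds, False  # gave up; the caller can flag the answer


def toy_model(prompt: str) -> str:
    """Toy model that 'hallucinates' until it sees feedback (purely illustrative)."""
    if "Feedback:" in prompt:
        return "We close at 22:00."
    return "We close at 23:00."
```

Calling `answer_with_feedback("When do you close?", "Open 11:00 to 22:00.", toy_model)` returns `("We close at 22:00.", 2, True)`: the first answer invents 23:00, gets flagged, and the second answer, generated with feedback, passes. Capping the rounds and returning a pass/fail flag lets the caller handle a model that never converges instead of looping indefinitely.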

This technology would not only help combat AI hallucinations but could also have many practical applications. For example, the paper shows how the system could be applied to an AI assistant in a restaurant, where it can provide customers with factual, verified information and even come up with solutions when the input (in this case, the customers’ requests) changes mid-conversation.

By iteratively refining the input with feedback, this process aims to make the language model’s responses as accurate and helpful as possible.

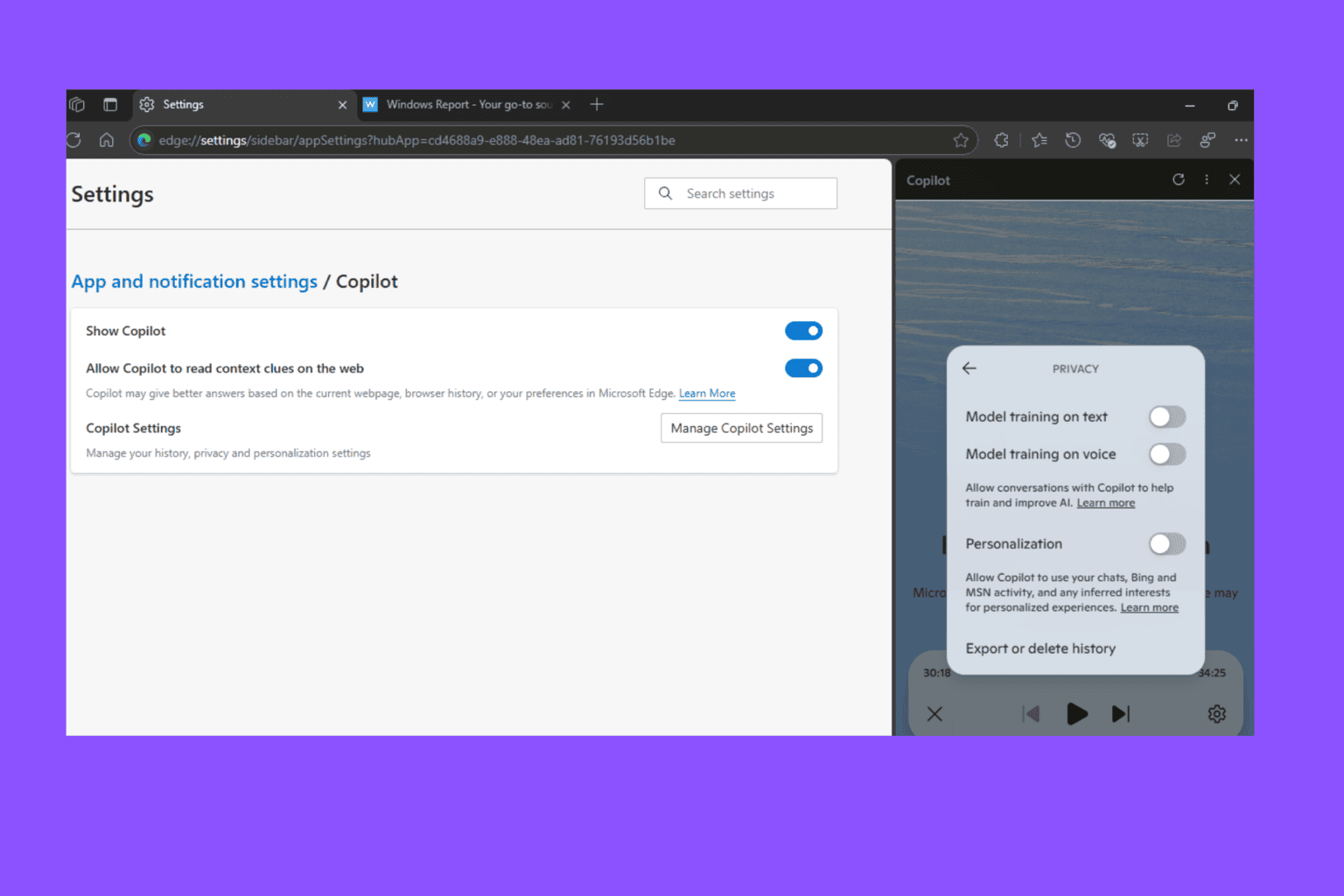

Even though it’s still just a patent, the technology described here suggests that Microsoft intends to make its AI models, such as Copilot, fully functional and factually correct.

The Redmond-based tech giant has already announced that it wants to mitigate and eventually eliminate hallucinations in its AI models, and this patent also proposes cases where AI could provide accurate, verified information in roles such as a restaurant assistant.

Without hallucinations, an AI model could provide answers that are not rooted in false information. Coupled with the voice capabilities of the new Copilot, such AI could be used to replace some of the most basic jobs, such as customer support.

What’s your take on this?

You can read the whole paper here.
