Microsoft's new Phi-3 LLM is small and trained with bedtime stories, but it still packs a punch

Phi-3 Mini is so small that it could run on a smartphone




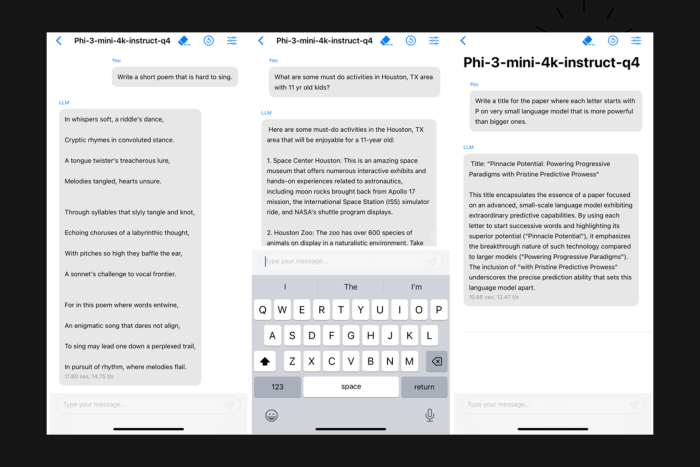

Microsoft has released its latest artificial intelligence model, Phi-3 Mini, and if you’re a tech connoisseur, you can jump straight to the technical report. Phi-3 Mini is Microsoft’s smallest AI model yet, but don’t let its size fool you. With 3.8 billion parameters, it packs a punch, performing close to models ten times its size. What’s even more fascinating is how this model was trained. Inspired by the simplicity and effectiveness of children’s bedtime stories, developers created a curriculum that presents complex concepts in simple language, making Phi-3 not just smart but also user-friendly.

Why would Microsoft invest in a small LLM?

Well, it turns out that smaller AI models like Phi-3 Mini are not just cheaper to run; they’re also a better fit for personal devices like phones and laptops, which can run them locally. This is a game-changer for smaller organizations or individuals who previously found the cost of using large language models prohibitive. Microsoft’s move to develop Phi-3 Mini and its upcoming siblings, Phi-3 Small and Phi-3 Medium, is a strategic step towards democratizing AI technology.
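To make the "small enough for a phone" point concrete, here is a rough back-of-the-envelope sketch of how much memory the weights of a 3.8-billion-parameter model would need at different numeric precisions. The precisions listed are illustrative assumptions, not Microsoft's published configuration, and the estimate covers the weights only, not the extra memory used while the model is running.

```python
# Rough weight-memory estimate for a 3.8B-parameter model (weights only).
PARAMS = 3.8e9  # parameter count quoted in the article


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Return the weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# The precisions below are illustrative assumptions, not a published setup.
ESTIMATES = {bits: weight_memory_gb(PARAMS, bits) for bits in (16, 8, 4)}

for bits, gb in ESTIMATES.items():
    print(f"{bits:>2}-bit weights: about {gb:.1f} GB")
```

Under these assumptions the weights come to about 7.6 GB at 16 bits, 3.8 GB at 8 bits, and 1.9 GB at 4 bits per parameter, which is why heavily compressed versions of a model this size can plausibly fit in a modern phone's memory.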

As for the competition, Phi-3 Mini is in a league of its own. While other small LLMs focus on specific tasks like document summarization or coding assistance, Phi-3 Mini shines with its versatility and performance, rivaling larger models in tasks ranging from math to programming to academic tests. And the best part? It can do all this directly on your smartphone, without needing an internet connection.

Microsoft’s approach to training Phi-3 Mini is nothing short of innovative. By taking a list of more than 3,000 words and asking another LLM to write children’s books with it, they’ve managed to teach the model in a way that’s both effective and endearing. This method not only enhances the model’s coding and reasoning abilities but also makes it more relatable and easier to interact with.

Phi-3 Mini is now available on platforms like Azure, Hugging Face, and Ollama, making it accessible to a wide range of users eager to explore its capabilities. We look forward to the release of Phi-3 Small and Phi-3 Medium, which are coming next. Running an LLM locally on a smartphone sounds very exciting.

What do you think about Microsoft’s Phi-3? Share your thoughts in a comment below.
