Opera introduces local LLM support in its bid to become an AI pioneer

Currently, this feature is only available in the Developer version of Opera


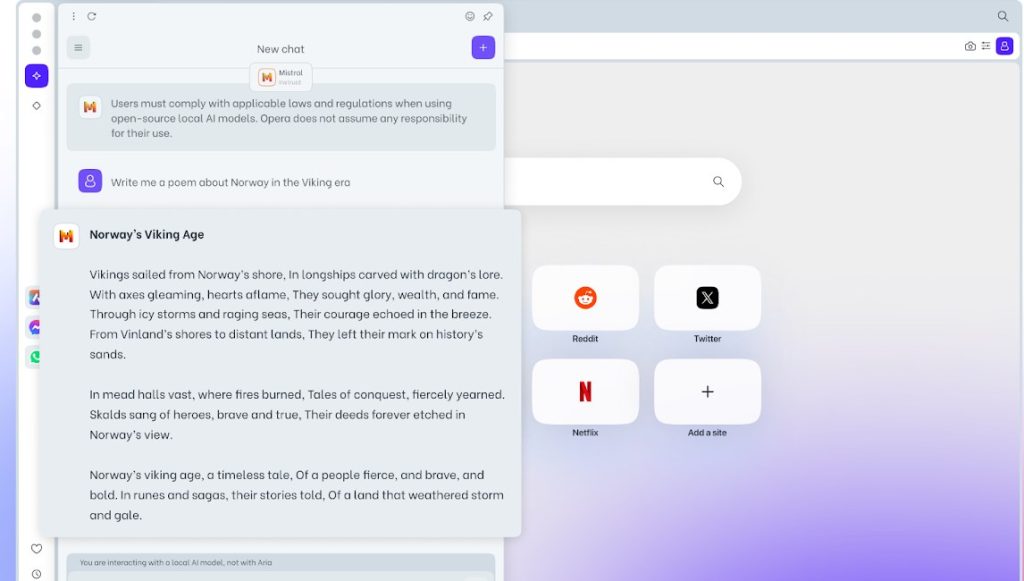

Opera has gone through many changes over the years, and the team behind it is working hard to incorporate artificial intelligence into every aspect of its browser.

As part of that effort, Opera will let users download and run large language models (LLMs) locally on their own computers.

Opera will allow you to use LLMs locally

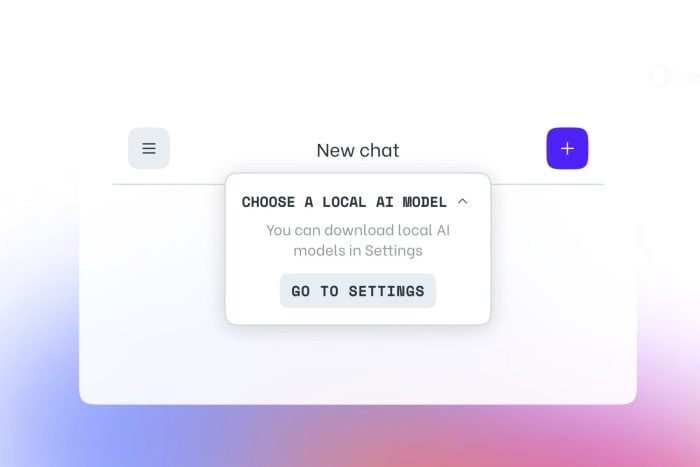

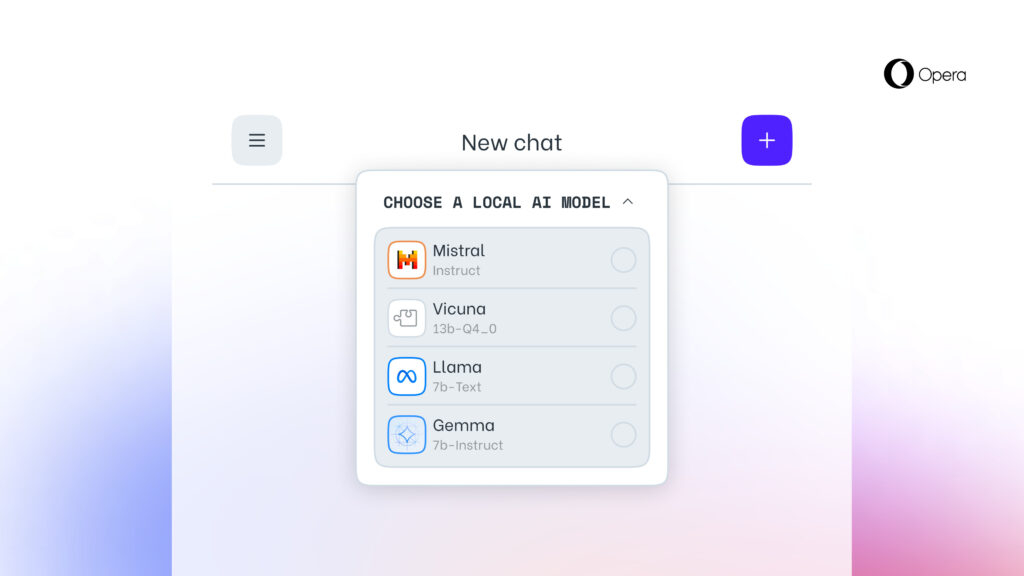

Opera runs an AI Feature Drops program that lets users try out experimental AI-powered features early. According to TechCrunch, Opera users will now also be able to download and use LLMs locally.

The company uses the open-source Ollama framework in the browser to run these models on your computer, which means your data isn't sent to a remote server.
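Opera hasn't detailed how the browser talks to Ollama internally. As a rough illustration of what "local" means here, a standalone Ollama install serves models over a REST API bound to `localhost` (port 11434 by default). The Python sketch below assumes such a standalone install with a model like `llama2` already pulled; the helpers `build_request` and `ask_local_model` are hypothetical names, not part of Opera or Ollama.

```python
import json
import urllib.request

# Default endpoint of a standalone Ollama server (assumption: default port).
# The host is localhost, so the prompt never leaves your machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server (hypothetical helper)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama2") -> str:
    """Send the prompt to the local server and return the generated text (hypothetical helper)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        # With "stream": False, Ollama returns one JSON object whose
        # "response" field holds the full completion.
        return json.loads(resp.read())["response"]
```

With the Ollama server running, calling `ask_local_model("Hello")` should return the model's reply; because the request targets `localhost`, no third-party server is involved.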

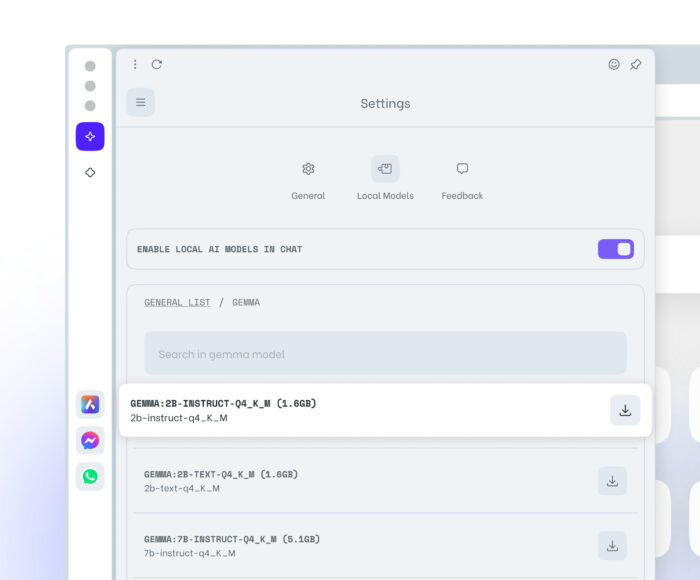

Available models include Llama, Vicuna, Gemma, Mixtral, and many more. Keep in mind that a single model can take up to 10GB of storage, so make sure you have enough free space before downloading.
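If you'd like to check free space before downloading a model, a quick Python sketch could look like the one below. It treats the 10GB figure above as a worst case and uses the current directory as a stand-in, since the folder where Opera stores its models isn't stated here; `has_room_for_model` is a hypothetical helper.

```python
import shutil

# Worst-case model size taken from the article's "up to 10GB" figure,
# counted in decimal gigabytes (an assumption).
MAX_MODEL_BYTES = 10 * 10**9


def has_room_for_model(path: str = ".", model_bytes: int = MAX_MODEL_BYTES) -> bool:
    """Return True if the drive holding `path` has at least `model_bytes` free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= model_bytes
```

For example, `has_room_for_model("C:\\")` on Windows reports whether the C: drive can fit a 10GB model.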

For now, the feature is available only in the Developer build of Opera, so you'll need that version if you want to give it a try.

If you’re interested in Opera and AI, why not join Opera’s Aria Scavenger Hunt for a chance to win a prize?