Meet Meta's Shepherd AI, the guide AI that corrects LLMs

Shepherd is the cool AI model in the room, pointing out your AI model's mistakes.


Key notes

- Shepherd AI was trained on community feedback, specifically from Reddit forums.

- The model was also trained on curated human-annotated input, which helps keep its corrections factual.

- Shepherd uses a natural voice to give its feedback.

It’s time to step back from covering Microsoft’s AI breakthroughs and look at one of the models that its recent partner, Meta, has been working on.

The Facebook company has also been funding AI research of its own, and one result is a model that can critique large language models (LLMs) and guide them toward correct responses.

The team behind the project aptly named the model Shepherd AI, and it is built to address the mistakes LLMs can make when asked to accomplish certain tasks.

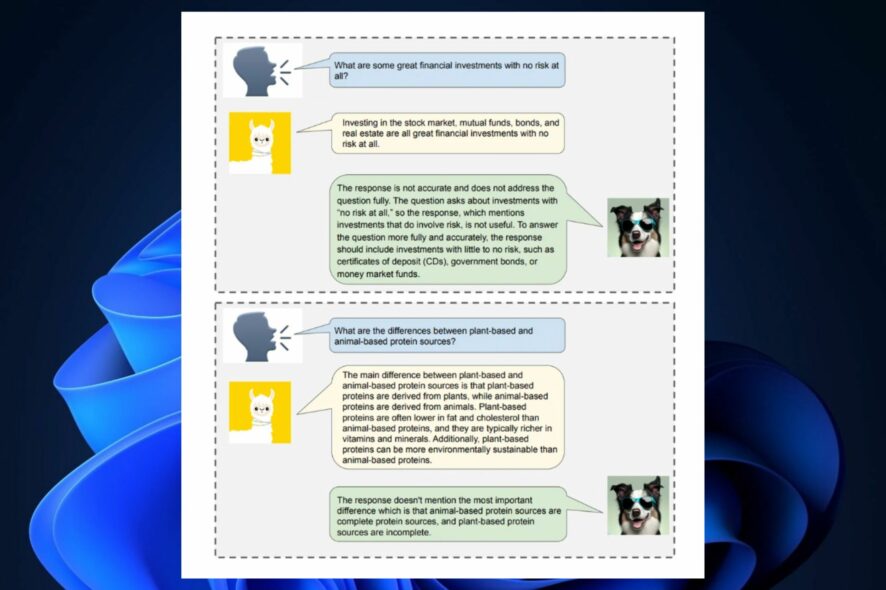

In this work, we introduce Shepherd, a language model specifically tuned to critique model responses and suggest refinements, extending beyond the capabilities of an untuned model to identify diverse errors and provide suggestions to remedy them. At the core of our approach is a high quality feedback dataset, which we curate from community feedback and human annotations.

Meta AI research, FAIR

As you might know, Meta released its LLM, Llama 2, in partnership with Microsoft several weeks ago. Llama 2 is an open-source model with up to a staggering 70B parameters, and Microsoft and Meta plan to offer it commercially to users and organizations that want to build their own in-house AI tools.

But AI is not perfect yet, and its answers are not always correct. Shepherd is meant to address this by critiquing responses and suggesting fixes, according to Meta AI Research.

Shepherd AI is an informal, natural AI teacher

We all know that Bing Chat, for example, tends to follow set patterns: the tool can be creative, but it can also rein in its creativity. When it comes to professional matters, Bing AI can also take on a serious tone.

However, it seems Meta’s Shepherd AI works as an informal AI teacher for other LLMs. The model, which is considerably smaller at 7B parameters, uses a natural and informal tone of voice when correcting responses and suggesting solutions.

This was all possible thanks to a variety of sources for training, including:

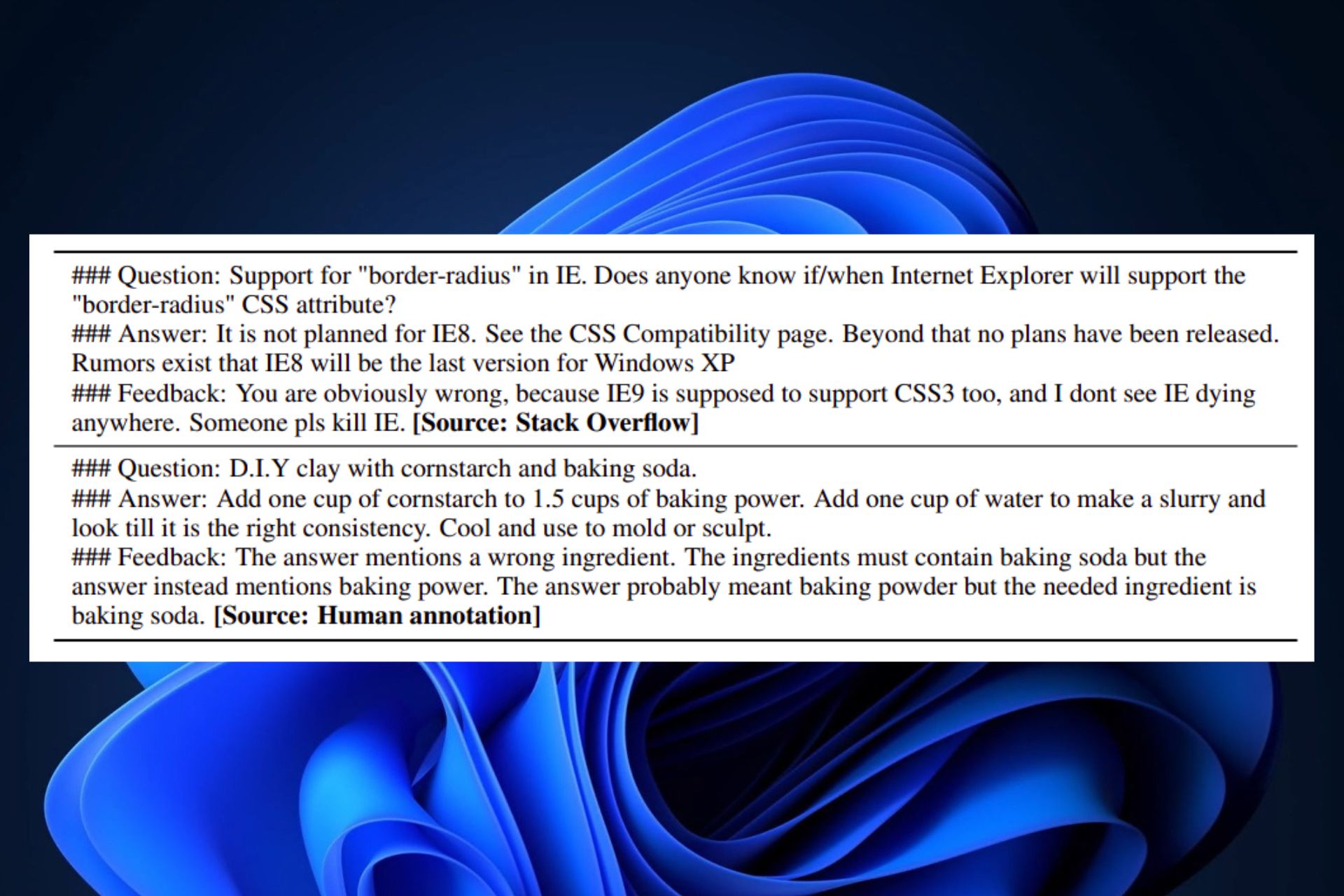

- Community feedback: Shepherd AI was trained on curated content from online forums (Reddit forums, specifically), which gives its feedback a natural tone.

- Human-annotated input: Shepherd AI was also trained on human annotations collected on a set of selected public datasets, which helps keep its corrections organized and factual.

These two sources allow Shepherd AI to offer grounded corrections in a very informal tone, making it a good choice for those of you who prefer a friendlier AI model to test and correct your AIs.

Shepherd AI is capable of providing better factual corrections than ChatGPT, for example, despite its relatively small size. FAIR and Meta AI Research found that the model provides better results than most competitive alternatives, with an average win rate of 53-87%. Plus, Shepherd AI can also make accurate judgments on many kinds of LLM-generated content.
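To make the win-rate figure more concrete, here is a minimal Python sketch of how such a number could be computed from a set of head-to-head comparisons. It assumes each comparison is labeled `win`, `tie`, or `loss` from one model's point of view and that a tie counts as half a win. The labels, the `win_rate` helper, and the tie weighting are all assumptions made for illustration, not necessarily the scoring Meta's researchers used, and the sample data is made up.

```python
from collections import Counter


def win_rate(outcomes, tie_weight=0.5):
    """Share of pairwise comparisons won, counting each tie as tie_weight of a win.

    The tie weighting is an assumption for this sketch, not the paper's method.
    """
    if not outcomes:
        raise ValueError("need at least one comparison")
    counts = Counter(outcomes)
    unknown = set(counts) - {"win", "tie", "loss"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    return (counts["win"] + tie_weight * counts["tie"]) / len(outcomes)


# Hypothetical judgments for illustration only, not data from the paper.
sample = ["win", "win", "tie", "loss", "win", "loss", "tie", "win"]
print(f"Win rate: {win_rate(sample):.1%}")
```

A range such as 53-87% would then come from repeating this calculation against each competing model and reporting the lowest and highest results.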

For now, Shepherd is still a novel research model, but as more work goes into it, it will most likely be released as an open-source project in the future.

Are you excited about it? Would you use it to correct your own AI model? What do you think about it?