The chatbot used in Robert F. Kennedy Jr's campaign vanished

Third-party chatbots can exploit vulnerabilities, bypass security measures, and cause more harm than good


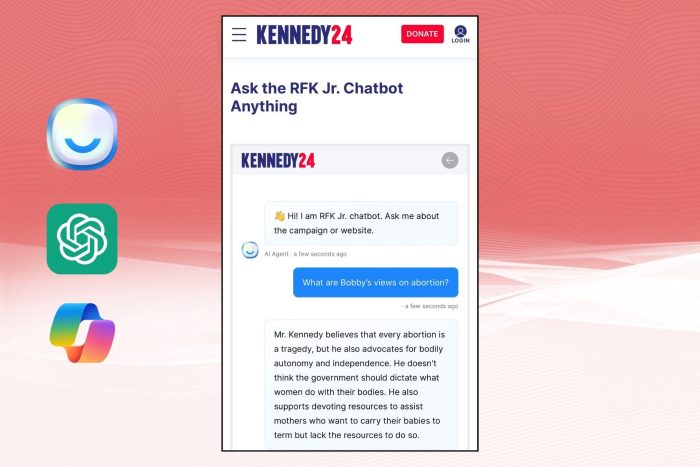

Robert F. Kennedy Jr. is running as an independent candidate to become the next president of the United States. His campaign is using several unorthodox methods, including a chatbot. The data used to train that AI included information drawn from Kennedy's own material. According to a spokesperson, the chatbot's primary purpose was to serve as an interactive FAQ.

Microsoft says that using Azure OpenAI Service to power Kennedy's campaign chatbot doesn't violate any of its rules. However, the chatbot disappeared from his website overnight, and that likely happened for a reason.

Why is AI not trustworthy?

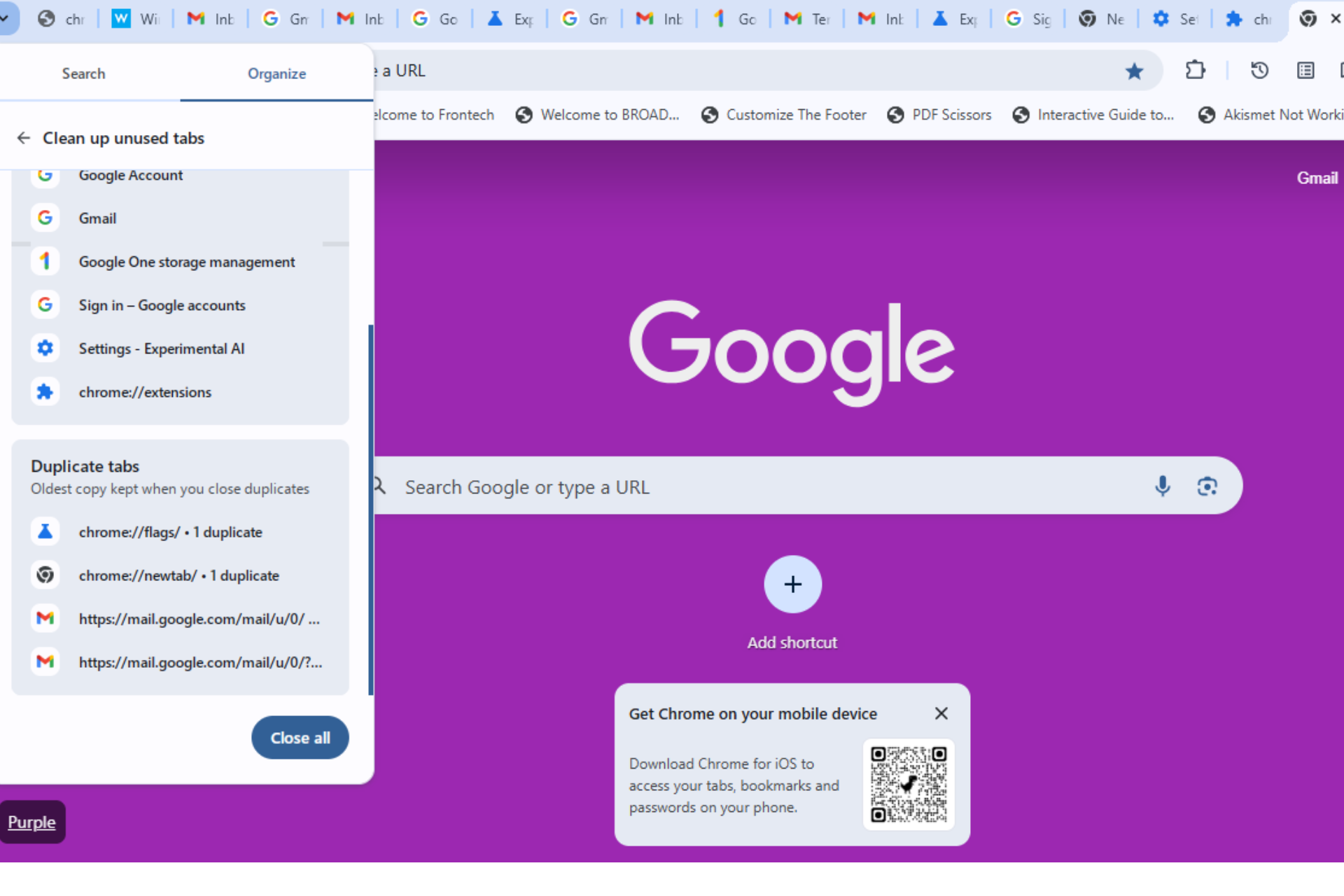

Unfortunately, AI can be untrustworthy because it learns how to answer our questions from whatever data it is given. On top of that, third-party AI tools like LiveChatAI make it possible to bypass the underlying model's safety measures and influence its responses. For example, the chatbot used by Robert F. Kennedy Jr.'s campaign repeated claims based on conspiracy theories and personal opinions, which led to misinformation.

According to Wired, when users asked how to register to vote, the bot gave them a link to Kennedy's We the People Party. Still, Kennedy's campaign chatbot is only one of many examples showing that AI is unreliable. OpenAI's GPT-4 and Google's Gemini have also given inaccurate answers in the past, and some of those answers were little more than predictions.

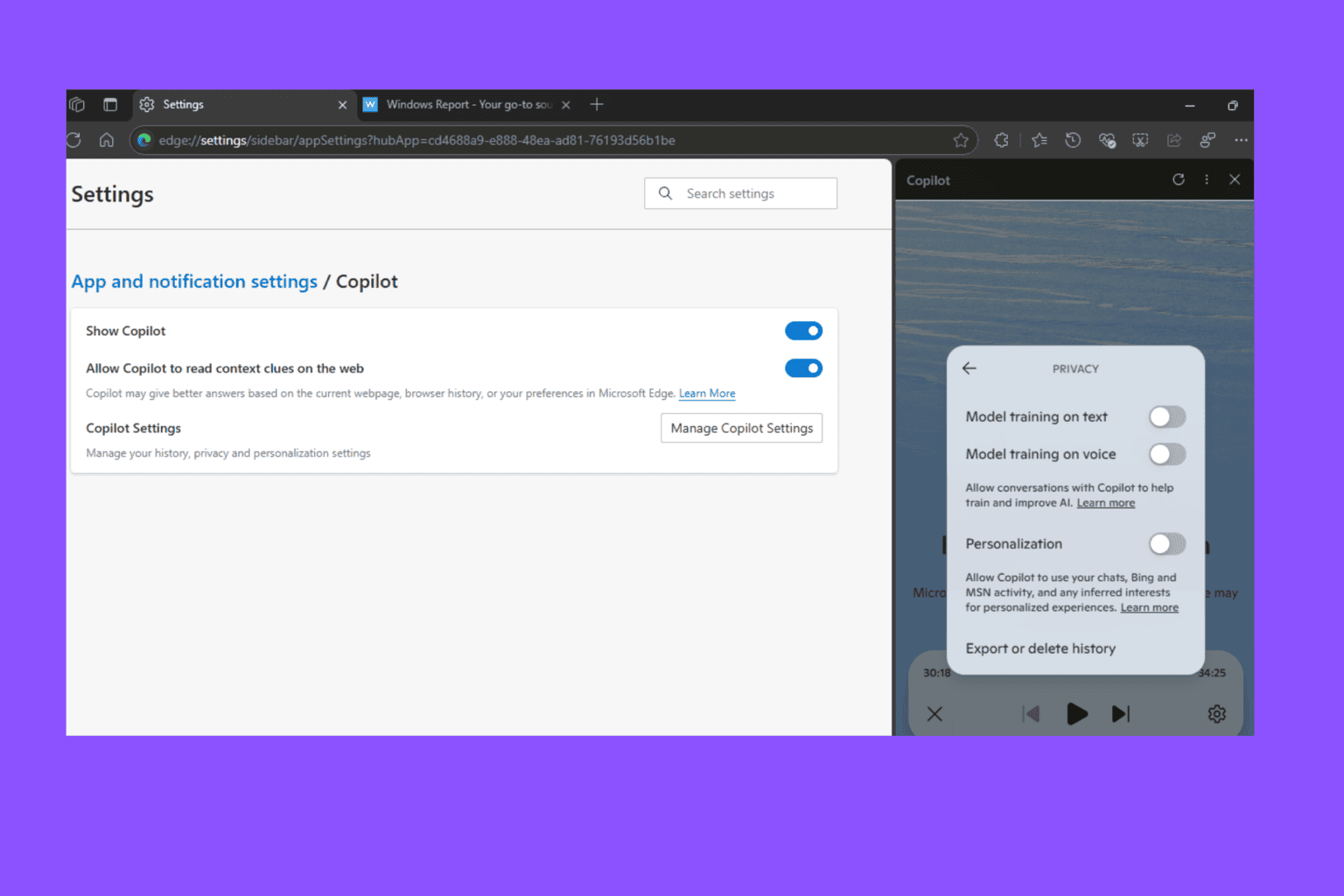

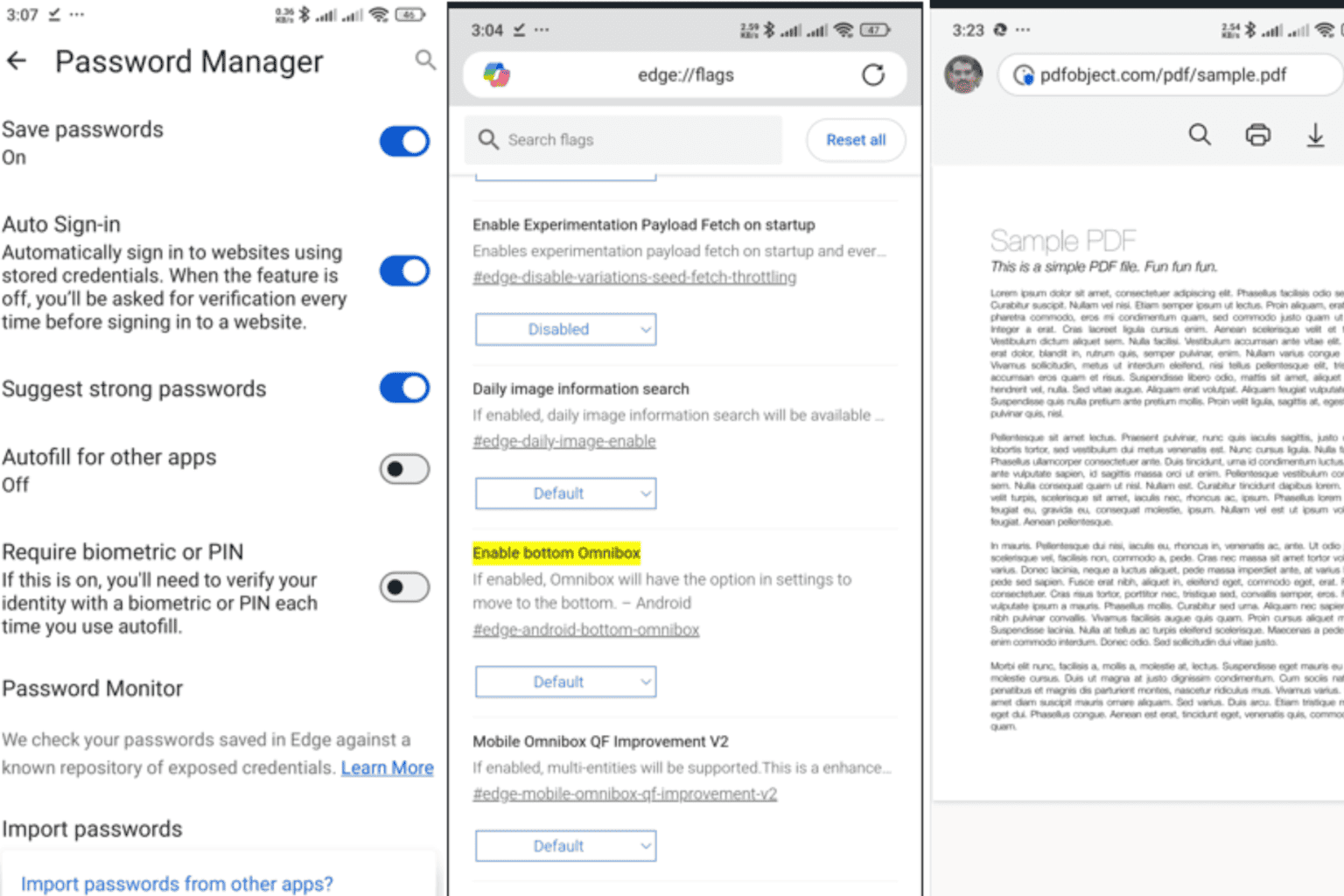

AI has some limitations regarding voting and politics due to a lack of data. Large language models (LLMs) answer our questions based on the data they were trained on, and they may lack newer data because of copyright restrictions. For example, Microsoft and OpenAI are being sued for copyright infringement over allegations that ChatGPT reproduced text directly from news reports without crediting their authors, titles, or sources.

In a nutshell, you should only trust AI if you check its sources afterward. Kennedy's campaign chatbot shows that these tools are unreliable, and it also casts doubt on their security: third-party bots can be used to get around security measures, and a chatbot's vulnerabilities can be exploited through prompts.

What do you think? Have you ever found a way to exploit a chatbot's vulnerabilities through prompts? Let us know in the comments.
