VASA-1 could become the main generator for deepfakes that will make or break elections

The new generation tool is a potential security threat

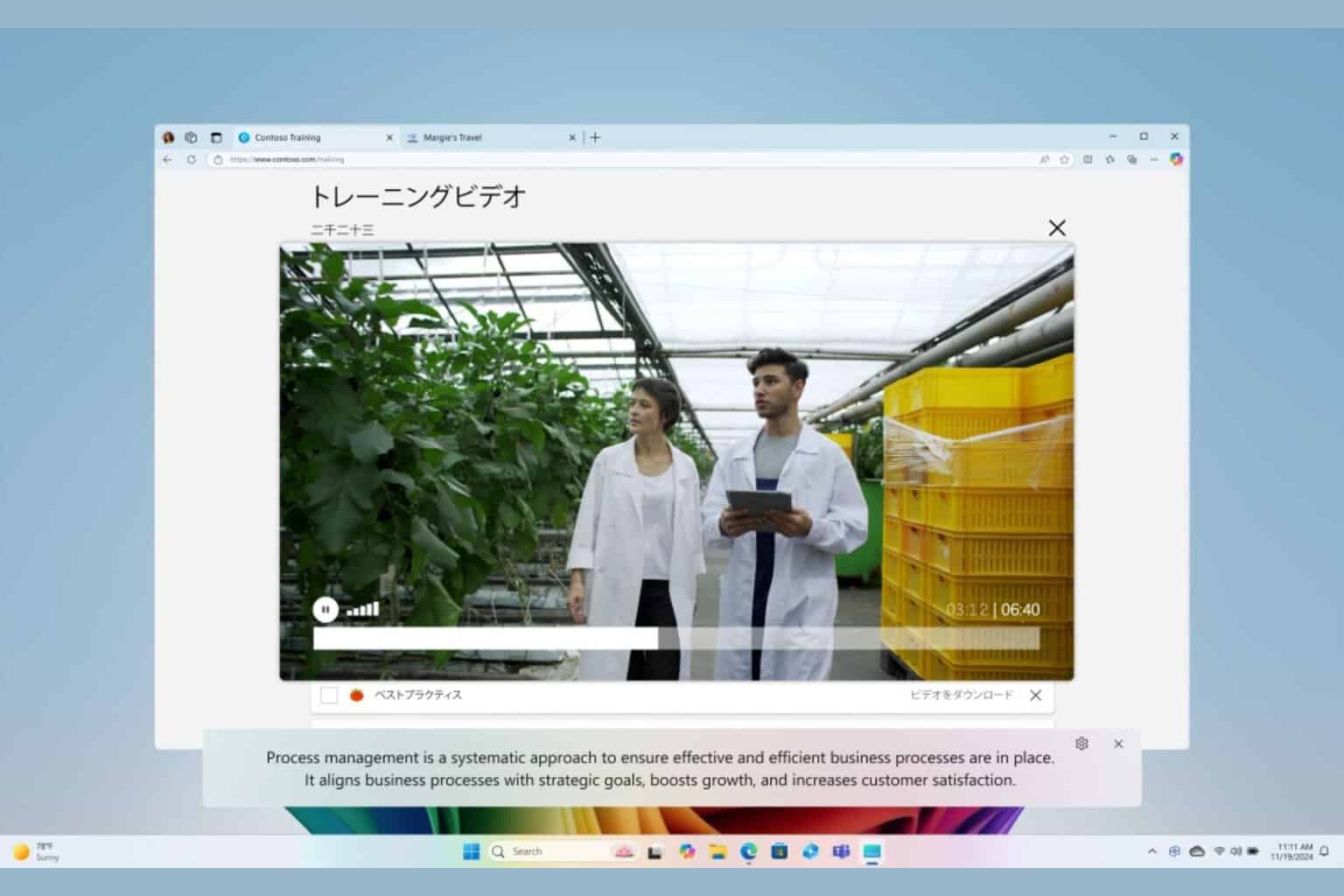

Microsoft’s VASA-1 is a new tool that can create deepfakes from just a photo and an audio clip, and wrongdoers could misuse it in various ways. Fortunately, the framework is not publicly accessible at the moment because developers are still researching it.

According to Microsoft, Russian and Chinese hackers use tools similar to VASA-1 to generate complex deepfake videos. Through them, they spread misinformation about existing socio-political problems to influence U.S. elections. For instance, one of their methods is to create a fake video of a lesser-known politician talking about pressing matters. In addition, they use AI-generated phone calls to make the deception more credible.

What can threat actors do with VASA-1 and similar tools?

Threat actors could also use VASA-1 and tools like Sora AI to create deepfakes featuring celebrities or other public figures. As a result, cybercriminals could damage the reputation of those people or use their identities to scam us. In addition, video-generating apps can be used to promote fake advertising campaigns.

It is also possible to use VASA-1 to create deepfakes of experts. For instance, a while ago, an advertising company generated a video of Dana Brems, a podiatrist, to promote fake practices and products, such as weight loss pills. Additionally, most threat actors committing this type of fraud are from China.

Even though the deepfakes generated with VASA-1 are complex, you don’t need advanced knowledge to use it, because it has a simple UI. So, anyone could use it to generate and stream high-quality videos. Besides that, all anyone else needs is an AI voice generator and a voice message. As a result, people might use it to create fake court evidence and other types of malicious content. Below you can find the post of @rowancheung showcasing it.

Are there any ways to prevent VASA-1 deepfakes?

VASA-1 shows how much AI has grown in a few years, and though it feels a bit threatening, officials are setting new rules in motion. For example, we have the EU AI Act, the first comprehensive regulation of artificial intelligence, which bans models deemed a threat. Furthermore, Microsoft assures us that VASA-1 won’t be available anytime soon. However, the model has already gone beyond its training, handling kinds of input it was not trained on.

Unfortunately, the existence of VASA-1 shows that it is possible to build a generative AI tool capable of creating convincing deepfakes. So, experienced threat actors could make their own malicious version.

Ultimately, we won’t be able to create deepfakes with VASA-1 anytime soon. However, this technology has the potential to become a real security threat. So, Microsoft and other companies should be careful when testing it to avoid leaving vulnerabilities. After all, nobody wants another AI incident.

What are your thoughts? Are you afraid that someone could use a deepfake generator against you? Let us know in the comments.
