Azure OpenAI Service gains GPT-4 Turbo and GPT-3.5 Turbo

Key notes

- Improved Function Calling

- JSON Mode

- Reproducible Output

- Available in preview

- 3x more cost effective for input tokens

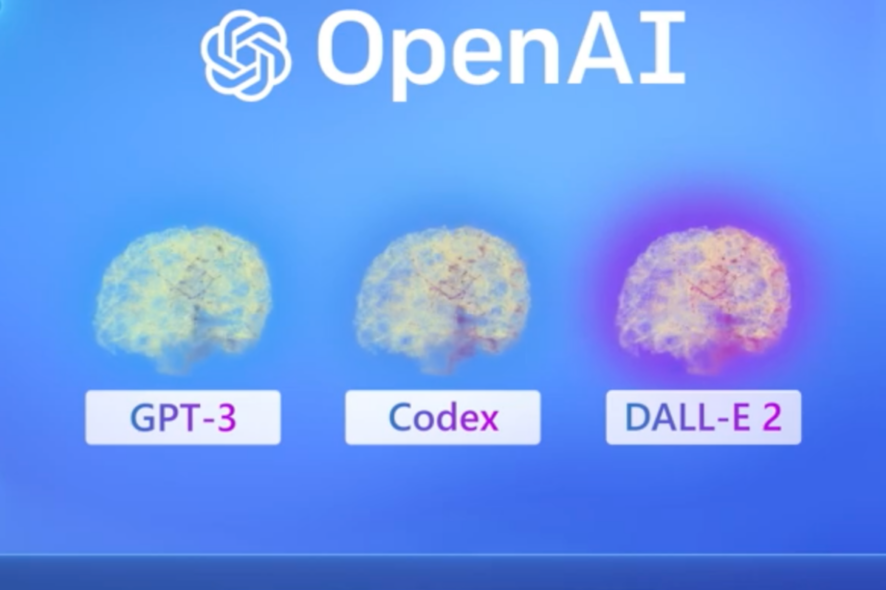

During Microsoft’s cloud developer conference, company CEO Satya Nadella announced that updated generative AI models would soon arrive in Azure OpenAI Service.

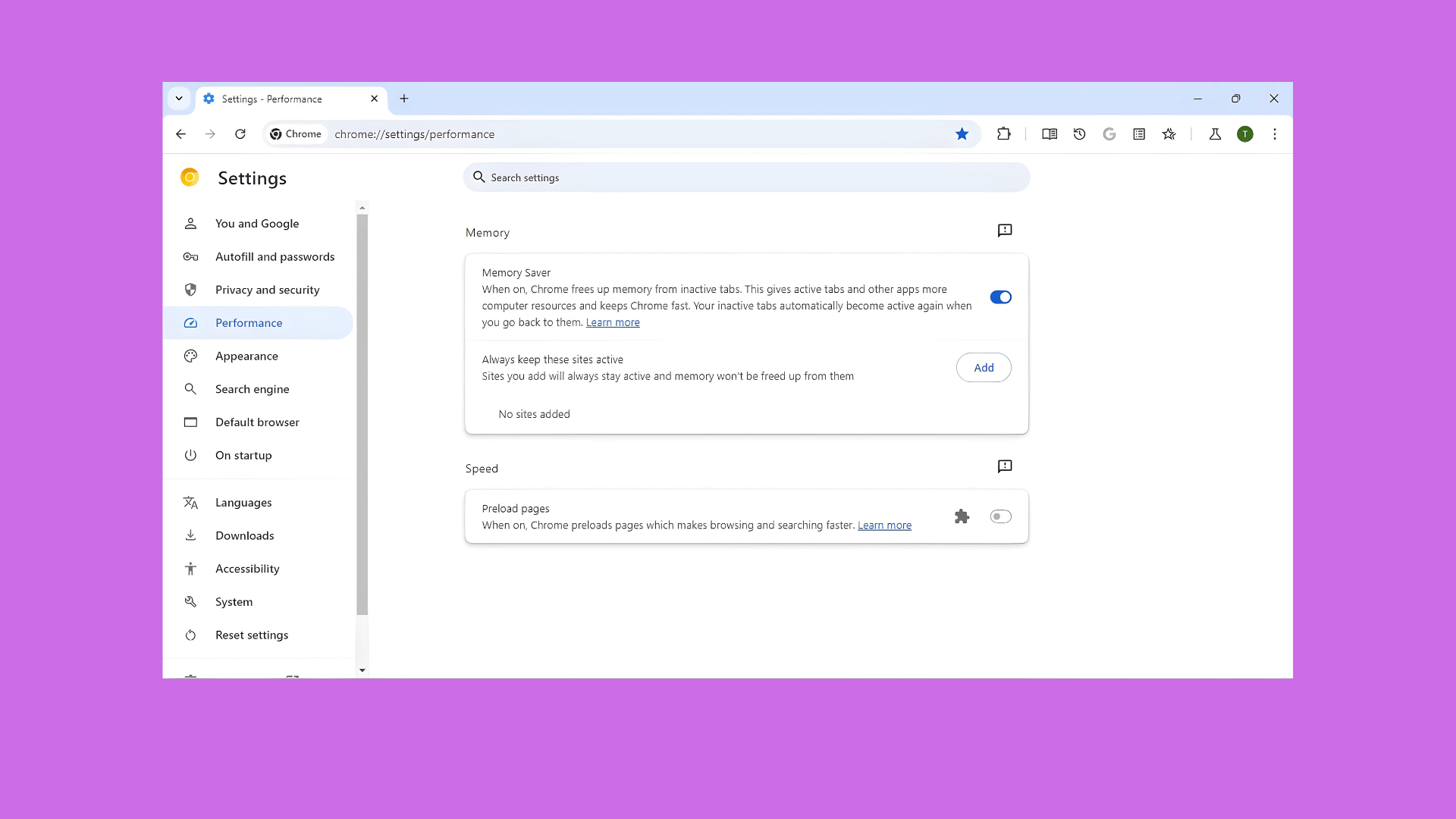

As of this week, Azure OpenAI Service customers can take advantage of the most advanced versions of OpenAI’s generative pre-trained models, GPT-4 Turbo and GPT-3.5 Turbo 1106.

Microsoft launched the new models in its existing markets while also expanding to three new regions: Norway East, South India, and West US. Azure OpenAI Service is now available in a total of fourteen global regions.

GPT-4 Turbo in particular brings knowledge of world events up to April 2023 as well as a 128K context window, allowing developers to include more custom data in their prompts using techniques such as Retrieval Augmented Generation (RAG).

As for token prices, GPT-4 Turbo costs $0.01 per 1,000 input tokens and $0.03 per 1,000 output tokens. At those rates, GPT-4 Turbo for Azure OpenAI Service is “3x more cost effective for input tokens and 2x more cost effective for output tokens” when compared to the regular GPT-4 models in use now.
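To make the pricing concrete, here is a minimal Python sketch that estimates the cost of a single request from the per-1,000-token rates quoted above. The regular GPT-4 rates are not stated in the article; they are an assumption inferred from the quoted 3x and 2x ratios, and the function and constant names are hypothetical.

```python
# Per-1,000-token prices for GPT-4 Turbo as quoted in the article (USD).
GPT4_TURBO_INPUT_PER_1K = 0.01
GPT4_TURBO_OUTPUT_PER_1K = 0.03

# Assumption: regular GPT-4 rates inferred from the quoted "3x" and "2x" ratios.
GPT4_INPUT_PER_1K = GPT4_TURBO_INPUT_PER_1K * 3
GPT4_OUTPUT_PER_1K = GPT4_TURBO_OUTPUT_PER_1K * 2


def estimate_cost(input_tokens, output_tokens, input_per_1k, output_per_1k):
    """Return the estimated cost in USD for one request."""
    input_cost = (input_tokens / 1000) * input_per_1k
    output_cost = (output_tokens / 1000) * output_per_1k
    return input_cost + output_cost


if __name__ == "__main__":
    # Example workload: 10,000 input tokens and 2,000 output tokens.
    turbo = estimate_cost(10_000, 2_000, GPT4_TURBO_INPUT_PER_1K, GPT4_TURBO_OUTPUT_PER_1K)
    regular = estimate_cost(10_000, 2_000, GPT4_INPUT_PER_1K, GPT4_OUTPUT_PER_1K)
    print(f"GPT-4 Turbo: ${turbo:.2f}")
    print(f"Regular GPT-4 (inferred rates): ${regular:.2f}")
```

For this example workload the sketch reports roughly $0.16 for GPT-4 Turbo against $0.42 at the inferred regular GPT-4 rates. Actual bills depend on the current Azure price list and region.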

Beyond the price improvements, there are also feature enhancements. Function calling with GPT-4 Turbo now supports multiple function and tool calls in parallel, making applications more efficient. GPT-4 Turbo also introduces JSON Mode to produce correctly formatted JSON output. Reproducible output is also possible, making the models’ probabilistic outcomes more consistent from one request to the next.
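As a rough illustration of how these three features surface in a request, the sketch below assembles the keyword arguments for a chat completions call. It assumes the parameter names used by the OpenAI chat completions API (`tools`, `response_format`, `seed`). The deployment name, the `get_weather` tool, and the `build_request` helper are hypothetical, and nothing is sent over the network.

```python
import json


def build_request(deployment, question, seed=42):
    """Assemble chat-completion arguments using tools, JSON mode, and a seed."""
    # Hypothetical tool definition; the model may request several tool calls in parallel.
    weather_tool = {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Look up the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    return {
        "model": deployment,  # assumed: the name of your Azure OpenAI deployment
        "messages": [
            # JSON mode expects the prompt itself to ask for JSON.
            {"role": "system", "content": "Reply with a JSON object."},
            {"role": "user", "content": question},
        ],
        "tools": [weather_tool],
        "response_format": {"type": "json_object"},  # JSON Mode
        "seed": seed,  # same seed and inputs aim for reproducible output
    }


if __name__ == "__main__":
    request = build_request("my-gpt-4-turbo-deployment", "Weather in Oslo and Pune?")
    print(json.dumps(request, indent=2))
```

With the `openai` Python package (version 1.x), a dictionary like this could be passed as `client.chat.completions.create(**request)` on an `AzureOpenAI` client. Treat that as an assumption to verify against the current SDK documentation, and include only the features your application actually needs.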

As for GPT-3.5 Turbo 1106, it brings many of the same updated features as GPT-4 Turbo and will also become the default GPT-3.5 Turbo model, with a 16K context window and new input and output token pricing.

As previously mentioned, both new GPT models are available in preview for most customers, with Microsoft promising to make them more widely accessible with Provisioned Throughput.