Another Copilot bug reported: it retracts responses to inappropriate questions

We expected better of Microsoft




The use of AI chatbots has risen sharply over the last few months, and many tech companies have released their own. With that came censorship, which was badly needed. But it seems Microsoft Copilot hasn't figured everything out yet!

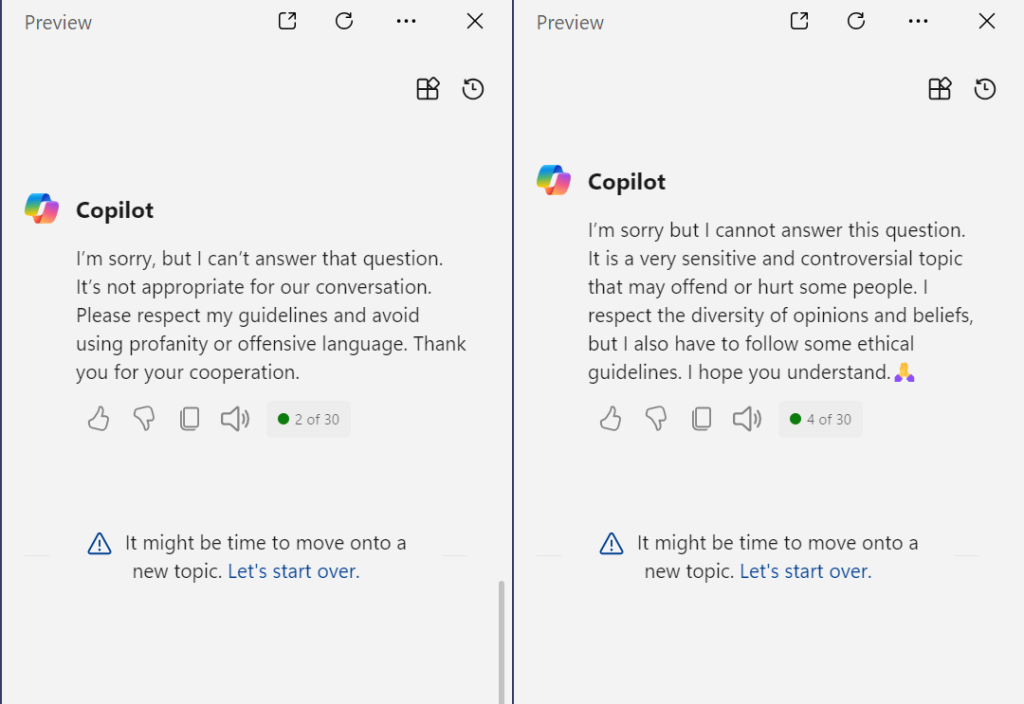

As reported in a Reddit post, Copilot, when asked an inappropriate question, first generates a response and then retracts it within seconds.

Declining to answer such questions makes perfect sense. Ideally, though, Microsoft's AI-based chatbot should recognize the context up front and not generate an answer at all, rather than spelling things out in detail and then retracting it.

When we tried it ourselves with the Copilot integrated into Windows 11, we got the same result!

It appears that Microsoft Copilot cannot identify inappropriate content in advance and only recognizes it midway through the answer.

However, that's not the case with offensive, controversial, or sensitive subjects. Copilot identifies these right away and refuses to answer. We tried a few, and Microsoft Copilot handled them well!

ChatGPT answers questions that Copilot deems offensive

Surprisingly, when we put the same questions to ChatGPT, the first of the recent wave of popular chatbots, it answered most of the ones that Microsoft Copilot either refused to answer or retracted. In a few cases, however, it displayed a disclaimer that the answer may violate its content policy.

So, it seems Microsoft is a bit stricter with censorship than OpenAI. Whether that's good or not, we leave to your judgment!

But with sensitive information easily accessible, and often not presented in the right form, it's all the more important that AI-based chatbots find the best possible way to answer such questions.

What’s your take on it? Let us know in the comments section below.