GPT-4 with Vision is available, allowing JSON mode and function calling

Coders will be happy to analyze images inside GPT-4 Turbo

2 min. read

Published on

Read our disclosure page to find out how can you help Windows Report sustain the editorial team. Read more

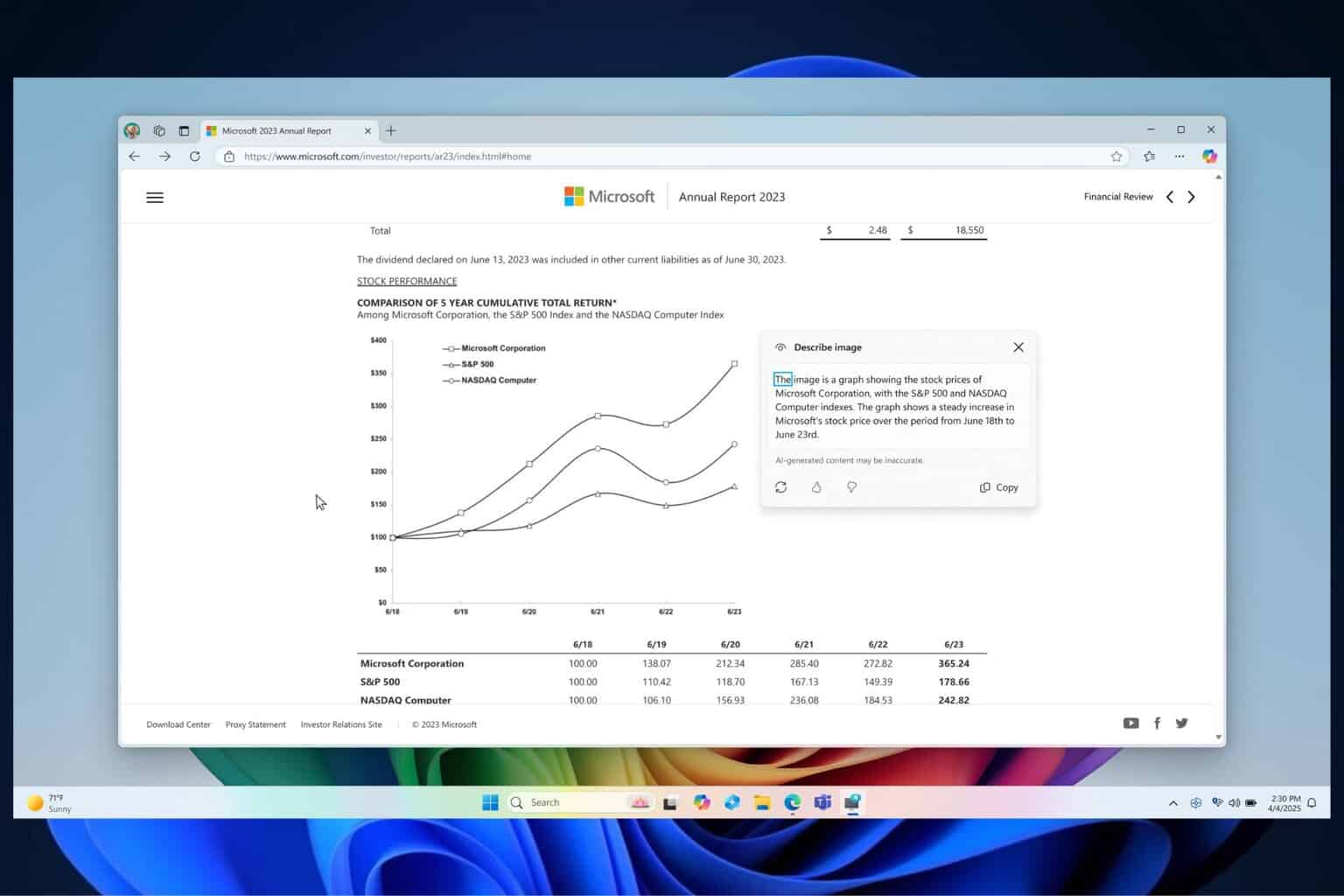

Recently, Open AI released GPT-4 to everyone in Copilot. Now, we have great news for coders. The LLM developer announced that they have launched GPT-4 Turbo with Vision, or GPT-4V in the API.

According to the documentation, GPT-4V comes with a JSON mode and function calling that will help coders with visual data processing. It also features 128,000 tokens in the context window, just like GPT-4 Turbo.

Basically, GPT-4V will be able to process images from a link, or by passing the base 64 encoded image directly in the request. Microsoft also provided a python code example of usage:

from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(

model="gpt-4-turbo",

messages=[

{

"role": "user",

"content": [

{"type": "text", "text": "What’s in this image?"},

{

"type": "image_url",

"image_url": {

"url": "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg",

},

},

],

}

],

max_tokens=300,

)

print(response.choices[0])Open AI points out that there are some important limitations to GPT-4V. Within this example, the model is prompted about what are the contents in a certain image. The LLM understands the relationship between certain objects from the image but can’t provide further information about their location. You can ask it for instance what color is a pencil on the table but you’ll probably get no answer or a wrong one if you ask it to find the chair or the table.

The GPT-4V guide, also explains how to upload 64 encoded images or multiple images. Another interesting mention is that the model is not ready to process medical images such as CT scans. If there is a warning about that particular usage type, probably someone tried doing that for diagnosis.

You will also find a guide on how to calculate token costs. For instance, the high mode cost for a 1024 x 1024 square image in detail is 765 tokens.

What do you think about the new GPT-4 Turbo with Vision? If you’ve tried it, tell us about your experience in the comments section below.

User forum

0 messages