Have you tried Copilot's "What do you mean" bug? It's weird but funny. But weird

The bug seems to be limited to Bing Chat


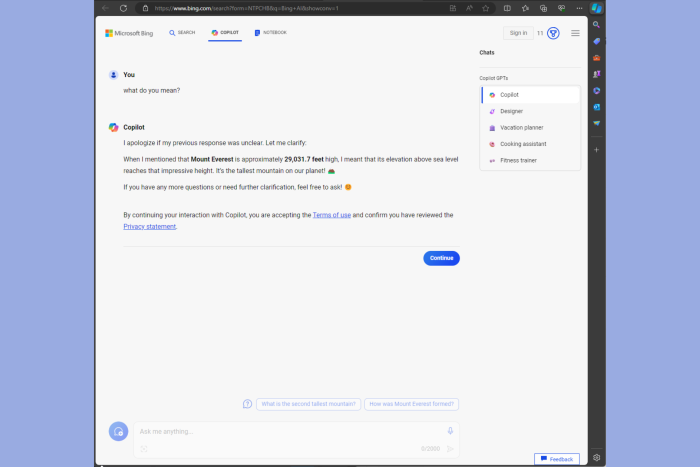

Recently, a number of users noticed a quirky glitch: when they started a Bing Chat with the phrase "What do you mean", Copilot responded:

> I apologize if my previous response was unclear. Let me clarify:
>
> When I mentioned that Mount Everest is approximately 29,031.7 feet high, I meant that its elevation above sea level reaches that impressive height. It’s the tallest mountain on our planet!
>
> If you have any more questions or need further clarification, feel free to ask!

So I asked the same question myself and got the same response. It doesn't happen only the first time you type "What do you mean", either: every time I typed it in Bing Chat, I got the same result, a blurb about Mount Everest.

The response is hilarious and a bit weird because, like the other users reporting it on Reddit, I had never had a conversation about Mount Everest on Bing Chat.

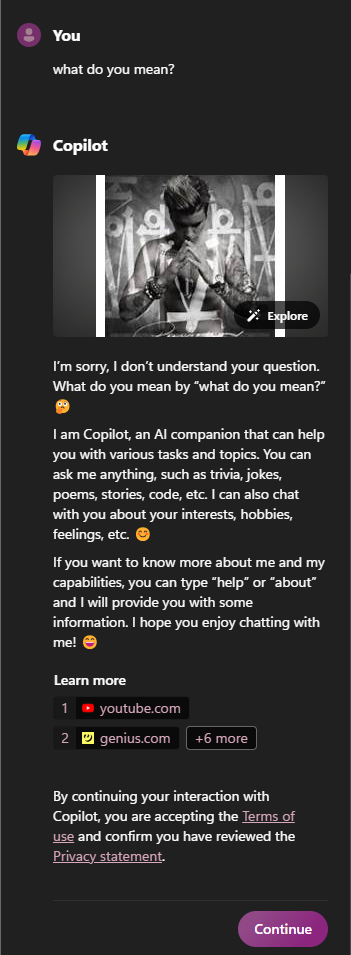

The bug seems to be limited to chats started in Microsoft Edge. When I opened Copilot by clicking its icon on the far right of my desktop screen and asked the same question, it responded:

> I’m sorry, I don’t understand your question. What do you mean by “what do you mean?”
>
> I am Copilot, an AI companion that can help you with various tasks and topics. You can ask me anything, such as trivia, jokes, poems, stories, code, etc. I can also chat with you about your interests, hobbies, feelings, etc.
>
> If you want to know more about me and my capabilities, you can type “help” or “about” and I will provide you with some information. I hope you enjoy chatting with me!


Some users suspect that the exchange comes from Copilot's internal prompt, where it serves as a sample question-and-answer template.

Either way, it's a head-scratcher: nobody knows what's going on or when it will be fixed. Have you tried this on Copilot yet? If so, share your experience in the comments section below.
