Microsoft and OpenAI defend their unapproved AI data harvesting

We might soon be able to remove our data from AI models

Thirteen plaintiffs are suing Microsoft and OpenAI to end what they describe as unpaid and unapproved AI data harvesting. They also accuse Microsoft of using copyrighted data to train its AI models. The company didn't deny the allegations. Instead, Microsoft argues that it can use public information at will. OpenAI, for its part, says that AI can only be trained with copyrighted content.

What does AI do with our data?

AI companies do harvest data without approval. Researchers may use every resource available to them, especially since most data is public. Paywalls can't stop them either, particularly because they are well funded. Furthermore, Microsoft and OpenAI have been harvesting our data for years.

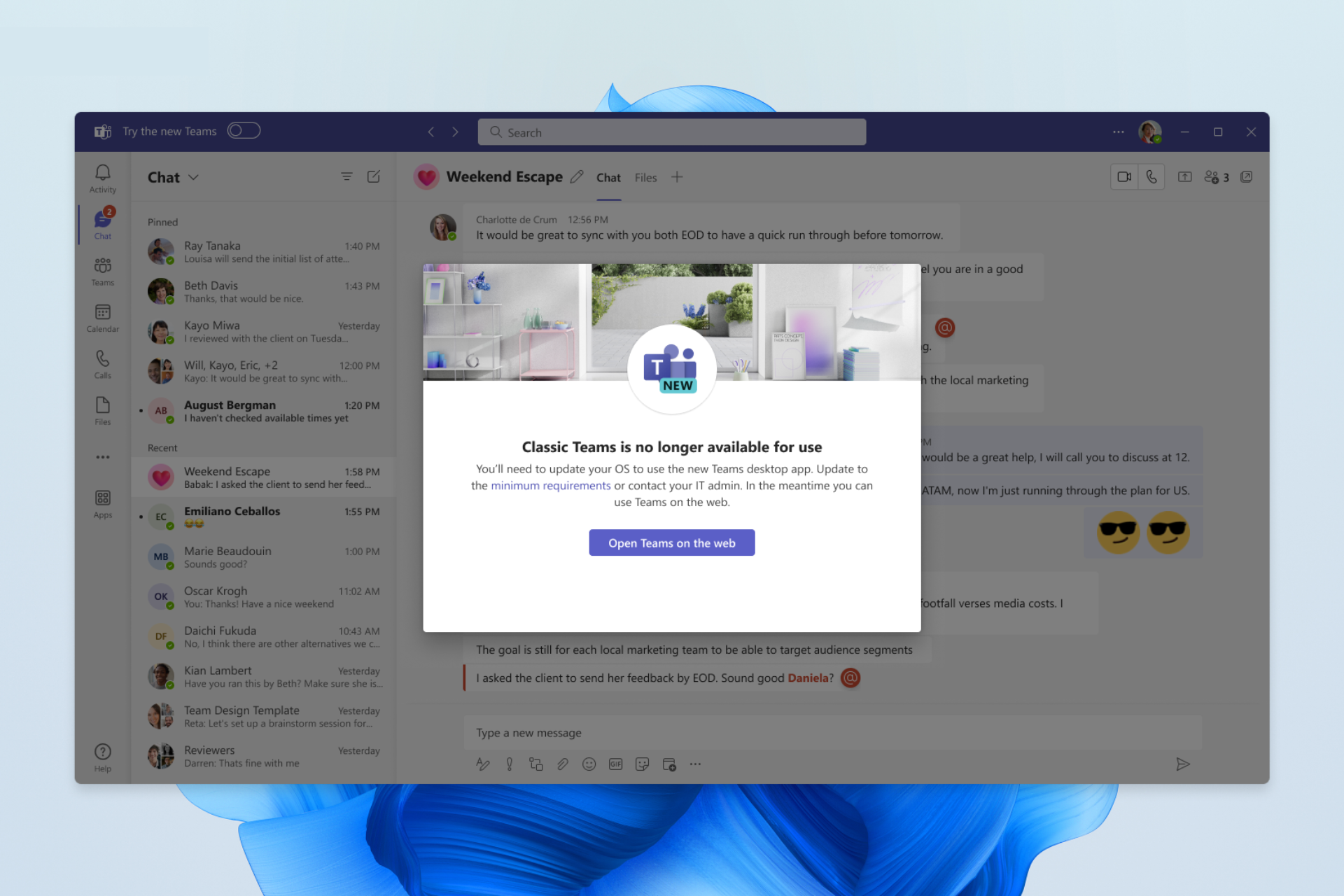

According to a privacy claim filed in September 2023, Microsoft and OpenAI carry out unapproved AI data harvesting through their products. The applications mentioned include Spotify, Snapchat, Stripe, Slack, Microsoft Teams, and MyChart.

The plaintiffs also accuse Microsoft and OpenAI of sharing, storing, tracking, and disclosing our data. In addition, according to an article from The Register, the companies fail to protect our private information. After all, a year ago, OpenAI had to add filters to prevent its AI from sharing phone numbers.

Microsoft tried to have the lawsuits dismissed by claiming that the plaintiffs had no plausible evidence of eavesdropping, scraping, or intercepting data, nor any proof that Microsoft caused them harm. OpenAI agrees with Microsoft that there shouldn't be any legal issues with using publicly available information to train AI. In other words, the companies are not concerned that some of us consider their methods unapproved data harvesting.

Ultimately, the thirteen plaintiffs consider Microsoft and OpenAI to be data thieves and argue that the companies should be more responsible about how they train their AI. If the plaintiffs win, we might gain a legal right to remove our data from AI models. However, ending unapproved AI data harvesting could also set back AI development.

If you want to find out more, check out The Register’s article.

What are your thoughts? Who do you side with? Let us know in the comments.