Opera will be the first browser to use AI LLMs directly on your PC

Opera lets you choose which LLM to use

Picture this: Opera is stepping up its game with on-device AI, bringing it to its Opera One and Opera GX browsers. I've seen many changes in browsers, but this one is quite a leap. In April 2024, we reported that Opera had brought local Large Language Models (LLMs) directly into the application. That was much more than a test; it was a serious commitment to offering AI capabilities to users on Windows, macOS, and Linux.

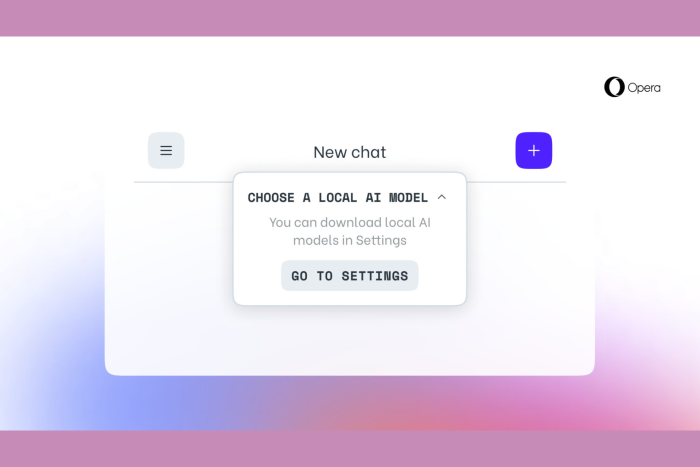

Opera lets you choose which LLM to use

In its latest press release, Krystian Kolondra, EVP of Browsers and Gaming at Opera, described the company's plan to move on-device AI from an experimental phase to a standard feature of its flagship browsers. This change isn't just about keeping up with technological advances; it's about reshaping the landscape entirely.

When Opera first tested on-device AI as an experiment, it was one of many features in the company's AI Feature Drops Program, which made more than 2,000 local LLM variants from over 60 model families available to run locally. That step was important because it made Opera the first major browser to include this capability built in.

But why should you and I care? Privacy and innovation. By handling AI tasks on our own devices, Opera reduces the need to send our personal information to remote servers. Opera is also continually adding AI features. For instance, its browser AI, Aria, can now understand images. Just imagine uploading a picture and getting detailed information about it directly in your browser sidebar.

Opera's AI efforts don't stop at software. The company is also investing in hardware and partnerships, such as its collaboration with Google Cloud and its AI data cluster in Iceland, powered by NVIDIA H100 GPUs. Notably, this cluster runs exclusively on renewable energy and ranks among the top supercomputers in the world.

However, the most important aspect of Opera's AI approach is that you can choose your own LLM; it doesn't force you to use ChatGPT, Llama, or any other model. You can pick the one that best suits your needs. So, while Edge is tied to Copilot and Chrome will be linked to Gemini, Opera will be a strong alternative in this area.

What do you think about Opera's AI developments? Let's discuss in the comments below.