Working with video? Here's why the new Sora AI should scare you

AI may threaten the advertising industry, but not just yet


The inevitable has happened! OpenAI has unveiled Sora AI, a new model that creates video from text, and the results are stunning.

Don’t panic just yet, because the new model only makes clips up to one minute long and the developer is still testing it.

Specifically, we train text-conditional diffusion models jointly on videos and images of variable durations, resolutions and aspect ratios. We leverage a transformer architecture that operates on spacetime patches of video and image latent codes. Our largest model, Sora, is capable of generating a minute of high fidelity video. Our results suggest that scaling video generation models is a promising path towards building general purpose simulators of the physical world.

OpenAI

How does Sora AI work?

The technical report from OpenAI is a specialized read, but basically, Sora AI turns visual data of all types into a unified representation that enables large-scale training of generative models.

In the demo videos OpenAI has published, it’s hard to tell that the footage is AI-generated. The textures, skin, and faces look natural, even though the clips are packed with detail and objects.

Where language models are trained on text tokens, OpenAI trains its video model on visual patches:

At a high level, we turn videos into patches by first compressing videos into a lower-dimensional latent space, and subsequently decomposing the representation into spacetime patches.

OpenAI

Training a model on video means processing large amounts of data. To keep that manageable, OpenAI trains a network that reduces the dimensionality of the visual data: it takes raw video as input and outputs a representation that is compressed both temporally and spatially.
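To make the idea of spacetime patches more concrete, here is a minimal sketch in plain Python. It is not OpenAI's code: the function name `to_spacetime_patches`, the patch sizes, and the toy 4x4x4 grid of numbers standing in for the compressed video are all assumptions made for illustration. It only shows how a block of frames can be cut into small cubes that each span a few frames, rows, and columns.

```python
from itertools import product


def to_spacetime_patches(latent, pt, ph, pw):
    """Split a latent video (nested lists indexed [t][y][x]) into flat patches.

    Each patch covers pt frames, ph rows, and pw columns. The patch sizes and
    the requirement that they divide the latent evenly are assumptions made
    for this illustration.
    """
    T, H, W = len(latent), len(latent[0]), len(latent[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("patch size must divide the latent dimensions")

    patches = []
    # Visit the first frame, row, and column of every patch in turn.
    for t0, y0, x0 in product(range(0, T, pt), range(0, H, ph), range(0, W, pw)):
        patch = [
            latent[t][y][x]
            for t in range(t0, t0 + pt)
            for y in range(y0, y0 + ph)
            for x in range(x0, x0 + pw)
        ]
        patches.append(patch)
    return patches


# Toy "latent video": 4 frames of 4x4 values, numbered 0..63 for readability.
latent = [[[t * 16 + y * 4 + x for x in range(4)] for y in range(4)] for t in range(4)]
patches = to_spacetime_patches(latent, pt=2, ph=2, pw=2)
print(len(patches), len(patches[0]))  # 8 8
```

Each of the eight patches here mixes values from two consecutive frames, which is what makes it a patch in space and time rather than a crop of a single image. In a real system, every entry would be a vector of latent features rather than a single number.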

From the results, it’s clear that Sora AI can deliver high-quality video. Although it’s limited to one-minute clips for now, in time it could be used to create commercials and, why not, whole episodes of your favorite show.

How will Sora AI change the industry?

It’s easy to see why Sora AI is met with excitement and anticipation, but many professionals working in the video industry are frowning right now, and for good reason.

As a marketing specialist, why would you spend a considerable budget to film a commercial in an exotic spot when you can create it on the computer in a couple of hours?

The advertising industry is a monster worth billions of dollars annually, and it feeds everyone in the video industry, including copywriters, film crews, directors, set builders, and many more specialized crews that most of us don’t even know are involved in the process.

The commercial and ads industry will probably change in time, but in the near future, the first to be affected will likely be the royalty-free video content providers.

Who will want to buy a short clip when they could create it in a few minutes with Sora AI?

Relax, the future is not that grim

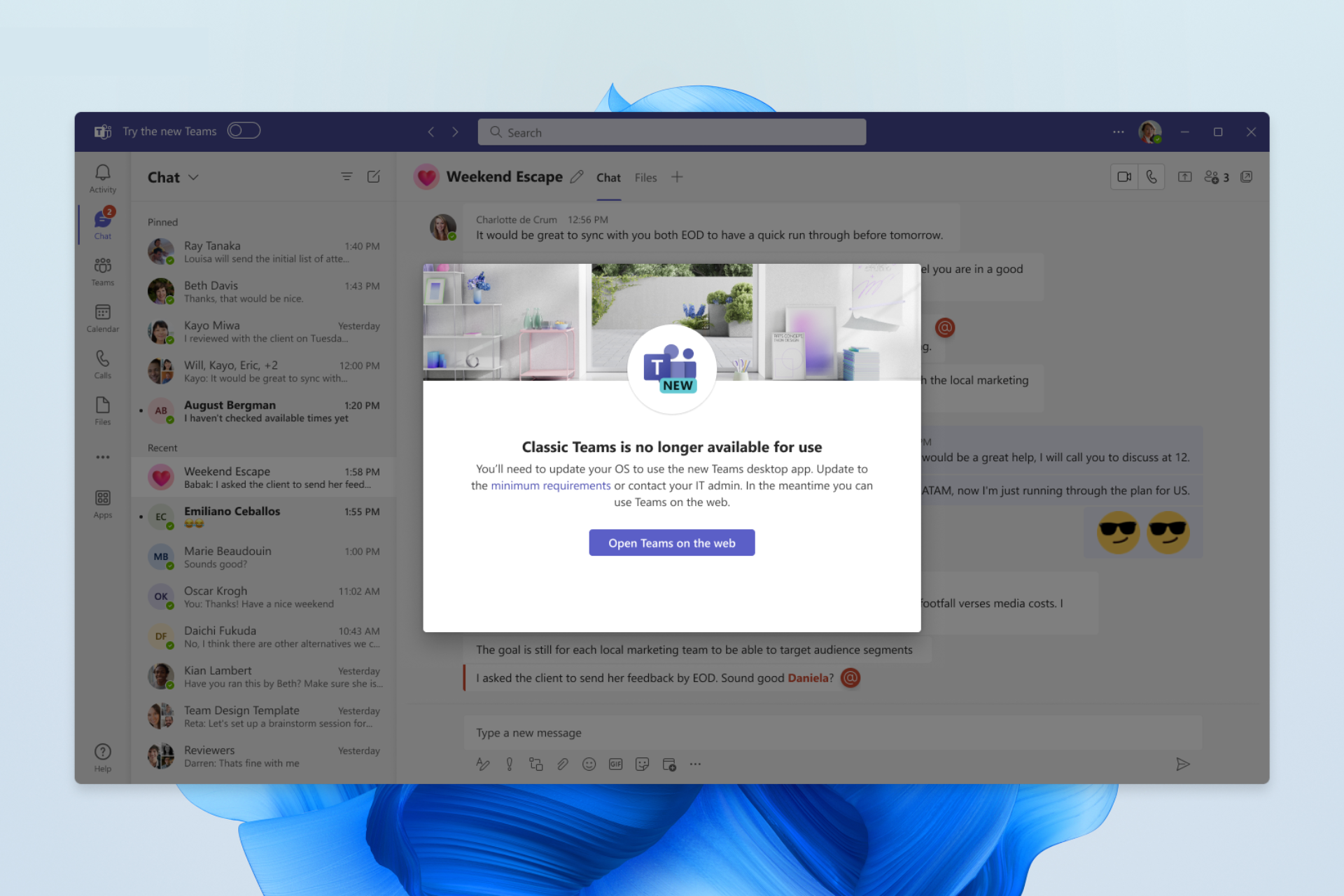

Everyone said that AI would replace journalists and content writers, but that turned out to be a mess. Microsoft is creating its own news reel, but it will use AI to train journalists and provide them with more information, not to write the news directly.

Right now, search engines are trying to identify and exclude AI-generated content because it has often turned out to be inaccurate or hallucinated. You still need human journalists to discern right from wrong, to make the correct connections, and to write a piece like this one.

It’s the same thing with images. Meta is labeling AI-generated images, and the search engines will probably follow suit and flag the fake photos.

That’s probably going to happen with the videos as well. But right now, text-to-video and Sora AI are in their infancy and facing some real conceptual problems.

For instance, if a generated character takes a bite out of a cookie, there is a chance that the cookie remains intact. That’s not because Sora AI doesn’t know what a bitten cookie should look like, but because it doesn’t always understand cause and effect, in this case that a bite removes part of the cookie.

Sora AI has a long way to go until it delivers the right results, and OpenAI hasn’t released it to everyone yet. It’s in a limited test preview for industry specialists, and the developer hasn’t announced a date for its public release.

What do you think about Sora AI and text-to-video? Let’s discuss it in the comments section below.