Nvidia Chat with RTX: this local AI tool is very powerful, but it'll eat a lot of storage

Get your PC ready for it.

Read our disclosure page to find out how you can help Windows Report sustain the editorial team.

Nvidia has released Chat with RTX, an AI tool that runs locally on Windows 11 PCs. While this is just an early demo version, it is serious competition for other similar AI tools out there, including Microsoft Copilot.

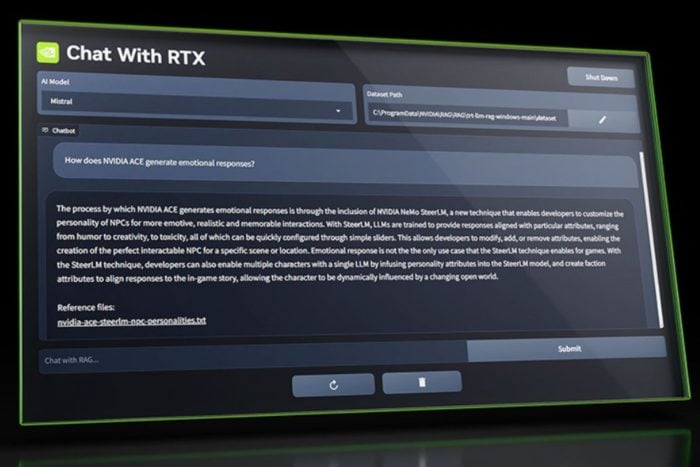

Chat with RTX runs locally on your Windows PC, meaning it doesn’t need an Internet connection to work and doesn’t rely on cloud servers either. The tool lets users choose between two LLMs (Llama and Mistral) and upload their own content in different formats (documents, notes, videos, or other data), which it then processes according to the user’s requests.

> Chat With RTX is a demo app that lets you personalize a GPT large language model (LLM) connected to your own content—docs, notes, videos, or other data. Leveraging retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration, you can query a custom chatbot to quickly get contextually relevant answers. And because it all runs locally on your Windows RTX PC or workstation, you’ll get fast and secure results.
>
> Nvidia

However, because it runs locally, Chat with RTX must first be installed on your Windows PC, and unlike Microsoft Copilot or OpenAI’s ChatGPT, which run online, it requires some serious hardware.

You’ll need at least 8GB of VRAM and 16GB of RAM, and for now it only runs on Windows 11, as the system requirements below show:

| Requirement | Details |
|---|---|
| Platform | Windows |
| GPU | NVIDIA GeForce™ RTX 30 or 40 Series GPU or NVIDIA RTX™ Ampere or Ada Generation GPU with at least 8GB of VRAM |
| RAM | 16GB or greater |
| OS | Windows 11 |
| Driver | 535.11 or later |

That’s not all, though. The demo version of Chat with RTX requires 35.1 GB of storage, so be prepared to free up some space for it. Keep in mind that this is just a demo, so the final app will most likely require even more space and more power.

We haven’t been able to test it yet, but running AI locally comes with disadvantages as well as advantages. Notably, this kind of AI tool doesn’t remember context, since it can’t be stored in the cloud, which users might find frustrating.

However, considering this is just a demo, Nvidia may tweak the model to create a local folder where it can store context.

We’ll see. Either way, it can be a good option for those who want an alternative to Copilot or ChatGPT. Just be ready: Nvidia Chat with RTX will put a heavy load on your PC’s resources.

You can download it from Nvidia’s website.
