External Monitor Not Using Your GPU? How to Force It

Make sure the monitor is fully compatible with your PC to avoid issues

Read our disclosure page to find out how you can help Windows Report sustain the editorial team.

Key notes

- Make sure you have the latest graphics drivers installed to avoid issues with external monitors.

- Setting your dedicated graphics card as the preferred graphics processor can help with this issue.

Many people use external monitors with their laptops because they offer more screen space and better image quality. However, many users have reported that their external monitor is not using the GPU.

Unfortunately, this isn’t the only issue; many users have also reported that their second monitor is not detected at all.

This can hurt performance, so it’s important to fix it. This guide shows you the best ways to do that, so let’s get started.

Why is my monitor not using the GPU?

- The monitor isn’t compatible with the HDMI standard or the HDMI cable you’re using.

- The dedicated graphics card isn’t set as the preferred graphics processor.

Do external monitors use the GPU?

On laptops, the integrated graphics render the user interface and other basic graphical elements. This means the integrated graphics are used for external monitors as well.

Only when you start a demanding application, such as a video game, does your laptop switch to the dedicated GPU.

Once that happens, the game runs on your dedicated GPU regardless of whether it is shown on the internal or the external display.

What can I do if an external monitor is not using the GPU?

Before we start, there’s one thing you need to check:

- Make sure that your cables and ports are compatible with your monitor.

1. Reinstall the graphics driver

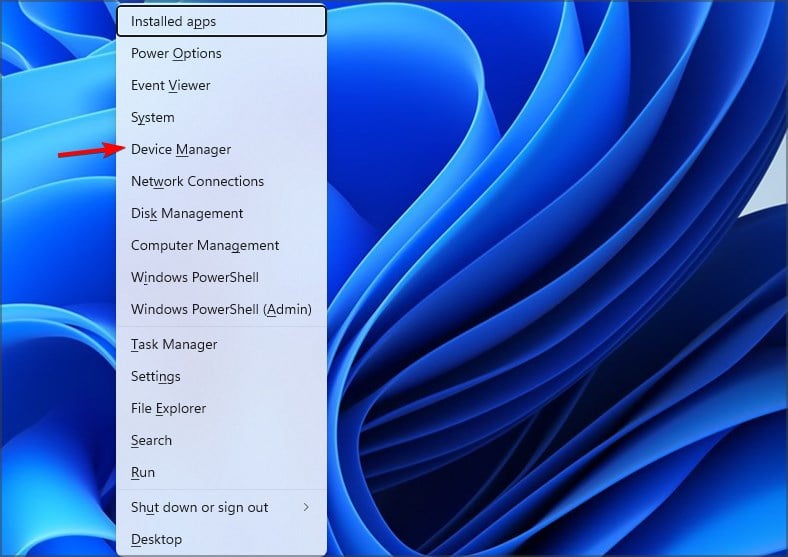

- Press Windows key + X and choose Device Manager.

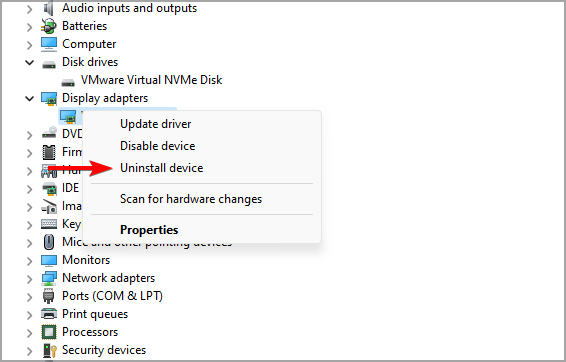

- Locate your graphics card. Right-click it and choose Uninstall device.

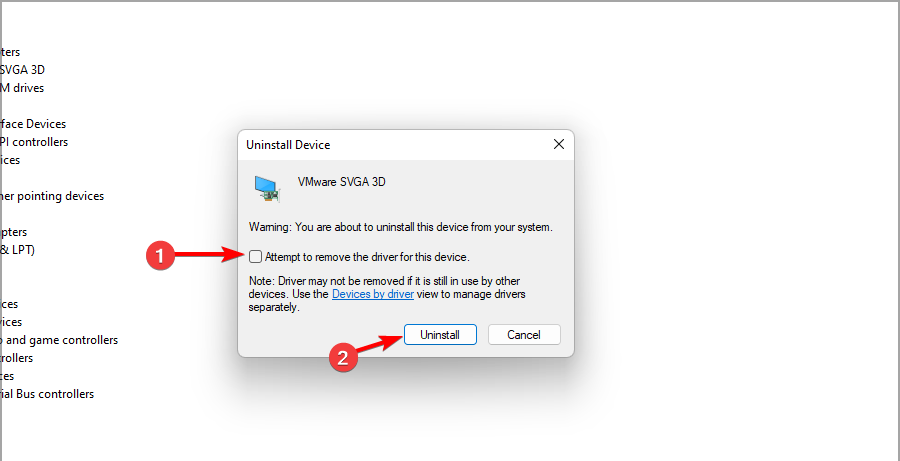

- If available, check the option to remove the driver software for this device and click Uninstall.

After the driver is uninstalled, install the latest graphics driver and check whether that solves the problem.

To uninstall your drivers, some users recommend Display Driver Uninstaller (DDU), so you might want to try that as well.

2. Set a preferred GPU

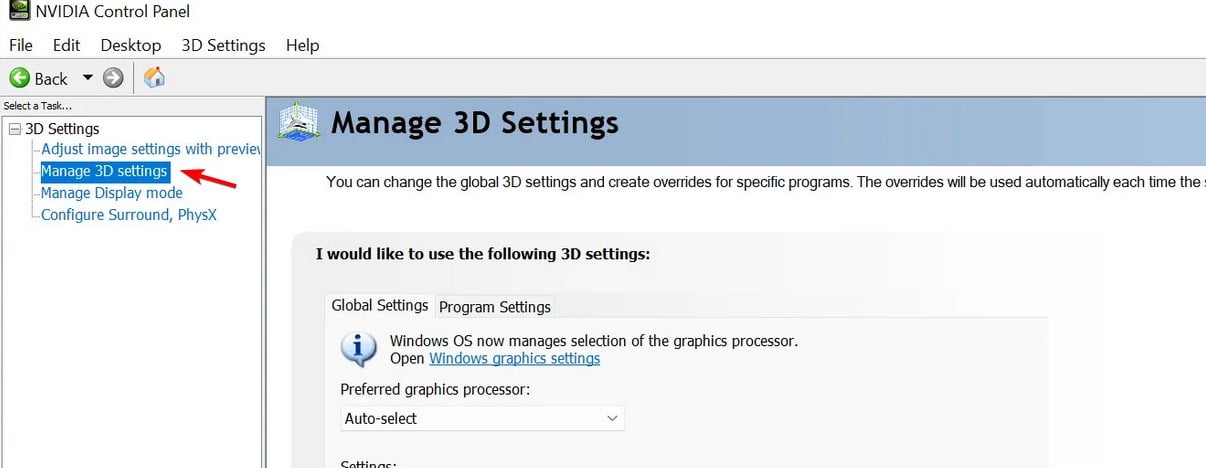

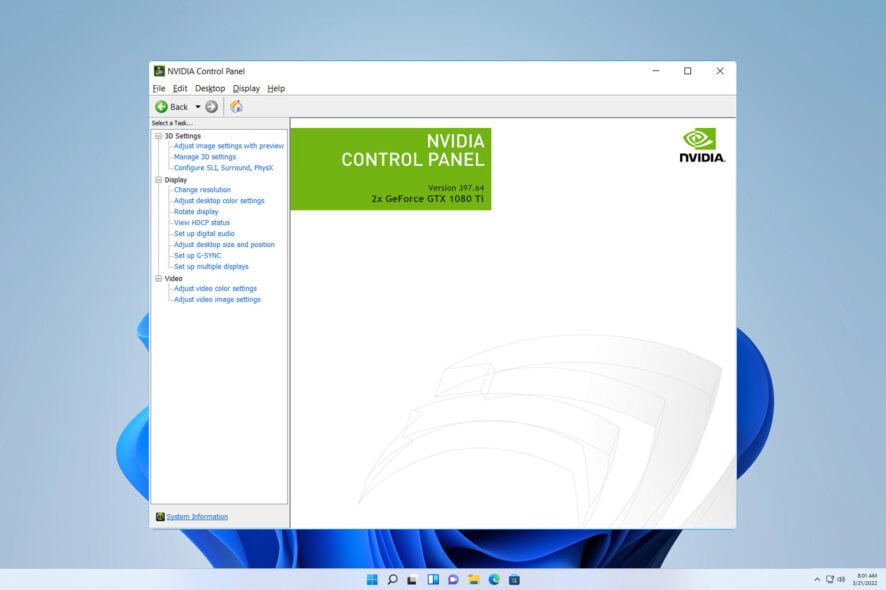

- Open the Nvidia Control Panel.

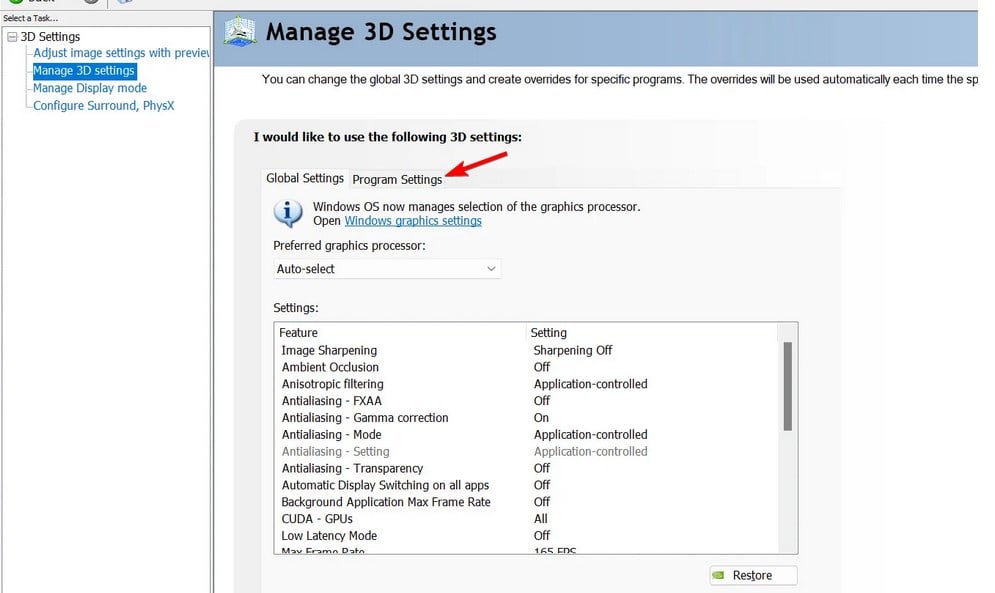

- Select Manage 3D Settings.

- Go to the Program Settings tab.

- Select the application that you want to run on your dedicated GPU.

- Set Preferred graphics processor to High-performance Nvidia processor.

- Start the application and move it to the external display.
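If you prefer not to use the Nvidia Control Panel, recent versions of Windows also let you set a per-app GPU preference under Settings > System > Display > Graphics. The Python sketch below shows that alternative in script form, assuming Windows stores the choice as a string value under `HKEY_CURRENT_USER\Software\Microsoft\DirectX\UserGpuPreferences`, named after the app’s full .exe path, where `GpuPreference=2;` means high performance. The helper names are hypothetical, it only runs on Windows, and the Settings app remains the safer route if you are unsure.

```python
import sys

# Assumption: the registry key used by Windows' per-app Graphics settings.
GPU_PREFS_KEY = r"Software\Microsoft\DirectX\UserGpuPreferences"

# Assumption: 0 = let Windows decide, 1 = power saving, 2 = high performance.
HIGH_PERFORMANCE = 2


def gpu_preference_value(preference: int) -> str:
    """Build the string data stored for one application (hypothetical helper)."""
    if preference not in (0, 1, 2):
        raise ValueError("preference must be 0, 1 or 2")
    return f"GpuPreference={preference};"


def set_gpu_preference(exe_path: str, preference: int = HIGH_PERFORMANCE) -> None:
    """Store a GPU preference for exe_path for the current user (Windows only)."""
    if sys.platform != "win32":
        raise OSError("this sketch only works on Windows")
    import winreg  # standard library module, available only on Windows

    # CreateKey opens the key, creating it first if it does not exist yet.
    with winreg.CreateKey(winreg.HKEY_CURRENT_USER, GPU_PREFS_KEY) as key:
        # The value name is the full path of the program's executable.
        winreg.SetValueEx(
            key, exe_path, 0, winreg.REG_SZ, gpu_preference_value(preference)
        )
```

To use it, call `set_gpu_preference()` with the full path of the program you want on the dedicated GPU, then restart that program and move it to the external display.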

As you can see, this is a minor issue, and it’s normal behavior, since the external monitor doesn’t use the dedicated GPU by default.

However, you can run into more serious issues, such as the external monitor not being detected after sleep, which we covered in a separate guide.

Which method did you use to fix this issue? Let us know in the comments below.