Microsoft's CoDi AI can do everything for you. Literally

CoDi is Microsoft's latest AI model.


Key notes

- CoDi takes text, audio, video, and image inputs and transforms them into content.

- This AI model will be of great use to people with disabilities.

- CoDi is a project that uses AI to enhance human-computer interaction.

Microsoft is at the forefront of AI. The Redmond-based tech giant is integrating AI across its software, including Windows 11. Copilot is already out in the Insider Program, Microsoft Teams is using AI to make work more efficient, and AI has found its way into the Microsoft Store as well.

But this is not all. As you may know, Microsoft has also invested heavily in AI research. LongMem, phi-1, Kosmos-2, and Orca 13B are just some of the AI models that will undoubtedly have an impact on how AI develops from now on.

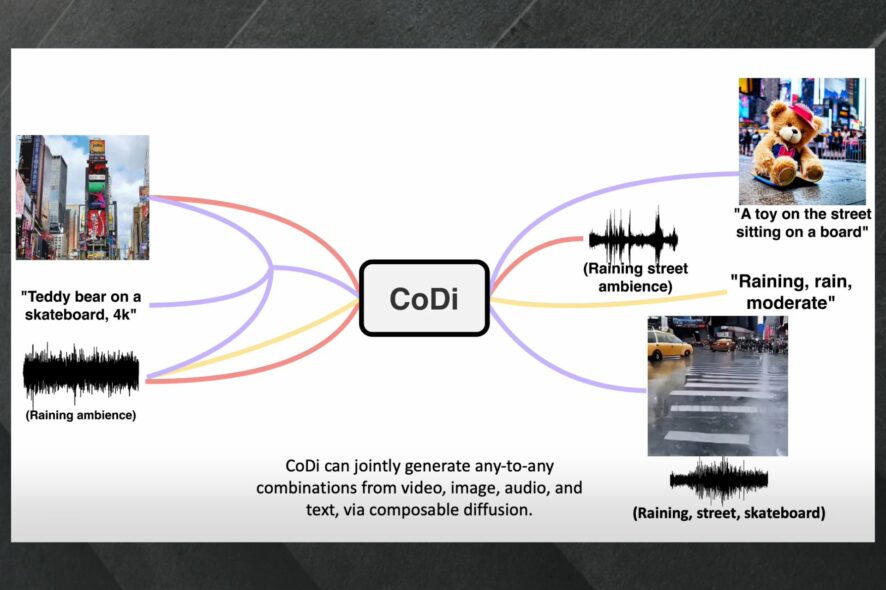

And now, Microsoft is coming up with another AI model, called CoDi. According to Microsoft, CoDi can generate creative content across text, image, video, and audio. The Microsoft Research team has already published a demo video that shows how CoDi works.

CoDi is also part of Microsoft’s Project i-Code, a project that uses AI to enhance human-computer interaction.

Here’s what Microsoft says CoDi will do for you

CoDi stands for Composable Diffusion and the model was developed by a team from Microsoft Azure Cognitive Services Research in collaboration with the University of North Carolina at Chapel Hill.

It can generate any combination of outputs from any combination of inputs: language, image, video, or audio.

CoDi takes these inputs, processes them, and generates multiple types of outputs simultaneously. You can see this in Microsoft's demo video.

In other words, CoDi can generate a contextual and realistic video from a text prompt, an image, and an audio clip. That capability should be useful to a lot of people.

The team that developed CoDi came up with a lot of use cases for the model. For example, people with disabilities will be able to create content far more easily. Students can also use it to create engaging and informative presentations and projects.

The model could also be built into computers to improve the overall user experience. And if it’s released to the public, we will be able to create highly interactive content from just a few inputs.

What do you think about it? Are you excited about CoDi? Let us know in the comments section below.
