Microsoft mistakenly leaks 30K+ internal employee messages, passwords, secret keys

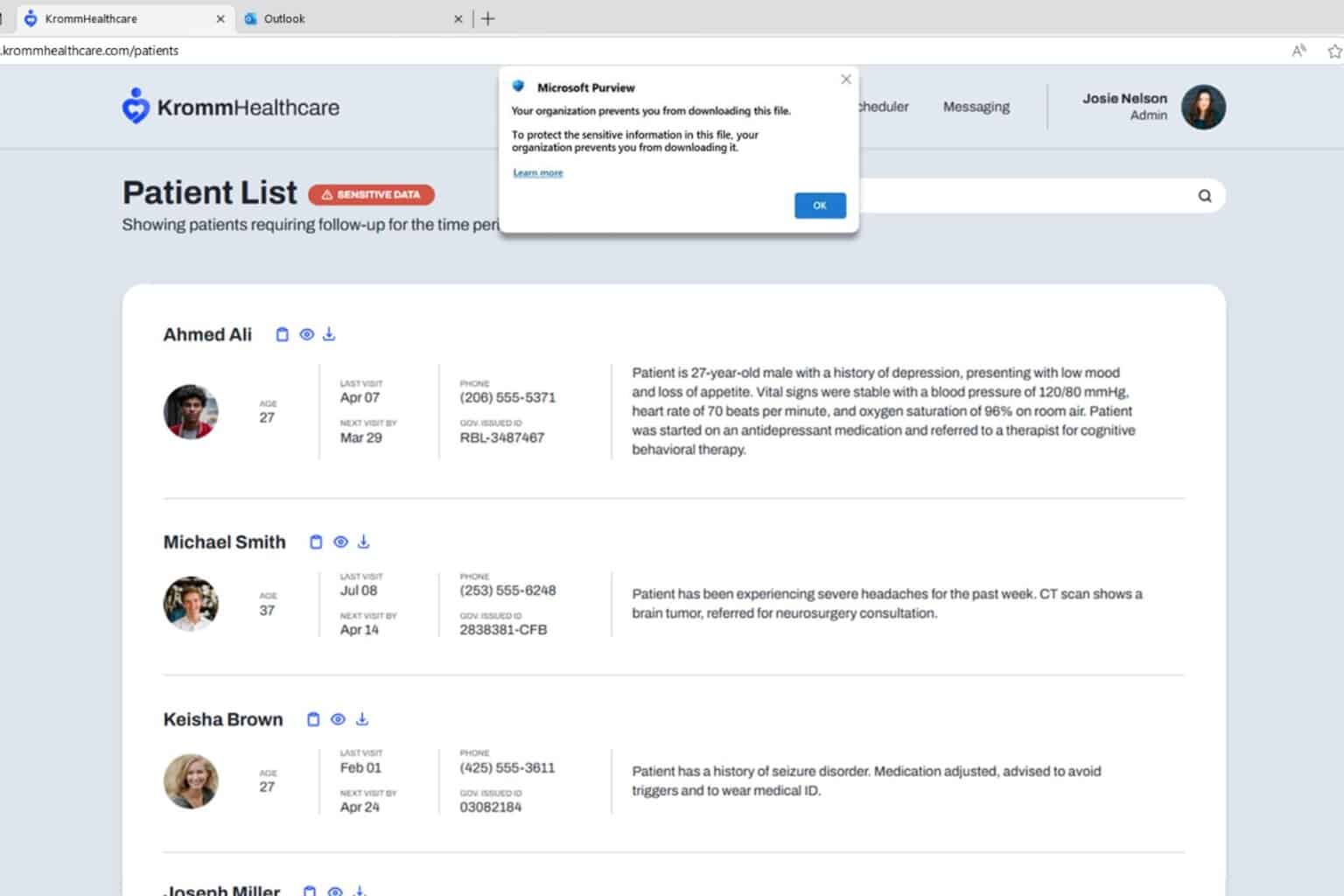

Microsoft AI researchers accidentally exposed a large amount of private data, including passwords, secret keys, and internal messages, to anyone who knew where to look on GitHub. The exposure stemmed from a shared link that granted far broader access to their storage than intended, according to research shared with TechCrunch by cloud security firm Wiz.

As part of its ongoing research into the accidental exposure of cloud-hosted data, Wiz identified a Microsoft GitHub repository belonging to the company’s AI research division. The repository contained open-source code and AI models for image recognition, and it instructed users to download the models from an Azure Storage URL.

The exposed data totaled 38 terabytes. It included the personal backups of two Microsoft employees, passwords, secret keys, and more than 30,000 internal messages from Microsoft employees.

The data was exposed because of a misconfigured shared access signature (SAS) token. SAS tokens are an Azure mechanism that lets users create shareable links granting access to an Azure Storage account’s data.

The data had been exposed through the misconfigured URL since 2020. Wiz found that the URL granted “full control” rather than “read-only” permissions, so anyone who knew where to look could have deleted, replaced, or inserted malicious content into the data.
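To illustrate the kind of misconfiguration described above, here is a minimal Python sketch that inspects a SAS URL before it is shared. It relies on two query parameters that Azure SAS links carry: `sp` (the granted permissions, as a string of letters) and `se` (the expiry time). The helper name `audit_sas_url`, the 30-day threshold, and the example URL are assumptions made for illustration; the URL is made up and is not the one from the incident.

```python
from datetime import datetime, timezone
from urllib.parse import urlsplit, parse_qs

# Permission letters treated as read-only in this sketch: r = read, l = list.
READ_ONLY = set("rl")

# Made-up example link; account, container, dates, and signature are placeholders.
EXAMPLE_URL = (
    "https://example.blob.core.windows.net/models"
    "?sv=2020-08-04&sp=racwdl&se=2051-10-06T07:09:59Z&sig=REDACTED"
)


def audit_sas_url(url, now=None, max_days=30):
    """Return a list of warnings for a SAS URL (hypothetical helper)."""
    now = now or datetime.now(timezone.utc)
    params = parse_qs(urlsplit(url).query)
    warnings = []

    # `sp` lists the granted permissions, one letter each.
    permissions = set(params.get("sp", [""])[0])
    extra = permissions - READ_ONLY
    if extra:
        warnings.append("grants more than read/list: " + "".join(sorted(extra)))

    # `se` is the expiry time as an ISO 8601 UTC timestamp.
    expiry_raw = params.get("se", [""])[0]
    if not expiry_raw:
        warnings.append("no expiry found")
    else:
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            # Date-only values carry no zone; treat them as UTC.
            expiry = expiry.replace(tzinfo=timezone.utc)
        if (expiry - now).days > max_days:
            warnings.append(f"expires more than {max_days} days from now")
    return warnings


if __name__ == "__main__":
    for warning in audit_sas_url(EXAMPLE_URL):
        print(warning)
```

A link that passes this check is not necessarily safe, since the sketch only reads what the URL itself declares. It is meant to show why a “full control” token with a distant expiry date stands out next to a short-lived, read-only one.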

Microsoft stated that no customer data was exposed, and no other internal services were at risk due to this issue.

This incident underscores the importance of robust security practices when handling sensitive data, especially in the context of AI research and open-source projects. It also highlights the need for ongoing monitoring and safeguards to prevent accidental data exposure.
